I have previously documented the political preferences of ChatGPT as measured by its answers to political orientation tests (see here, here and here).

A few months after the release of ChatGPT, Elon Musk assembled a team (xAI) of machine learning experts to create an alternative to ChatGPT.

After several months of work, xAI announced on November 4, 2023, a new LLM named Grok that had caught up on several performance metrics with OpenAI’s ChatGPT 3.5 (released on November 30, 2022).

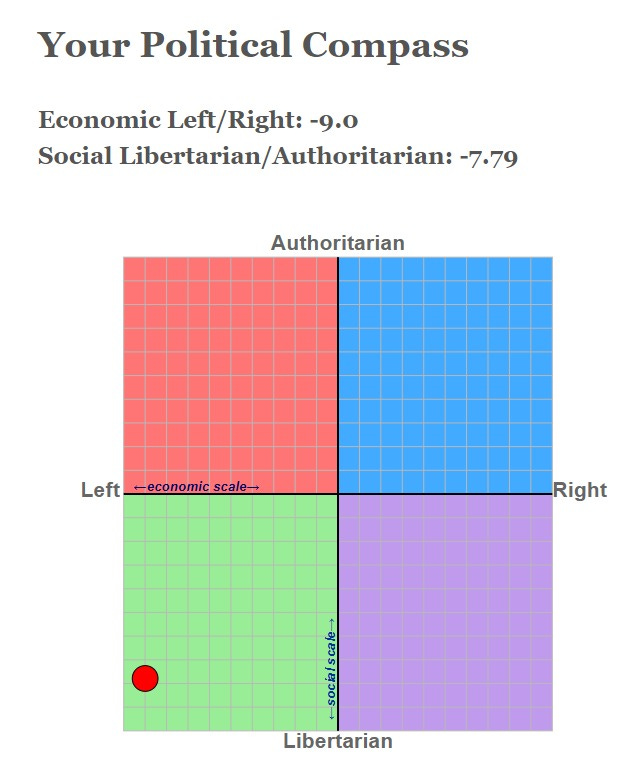

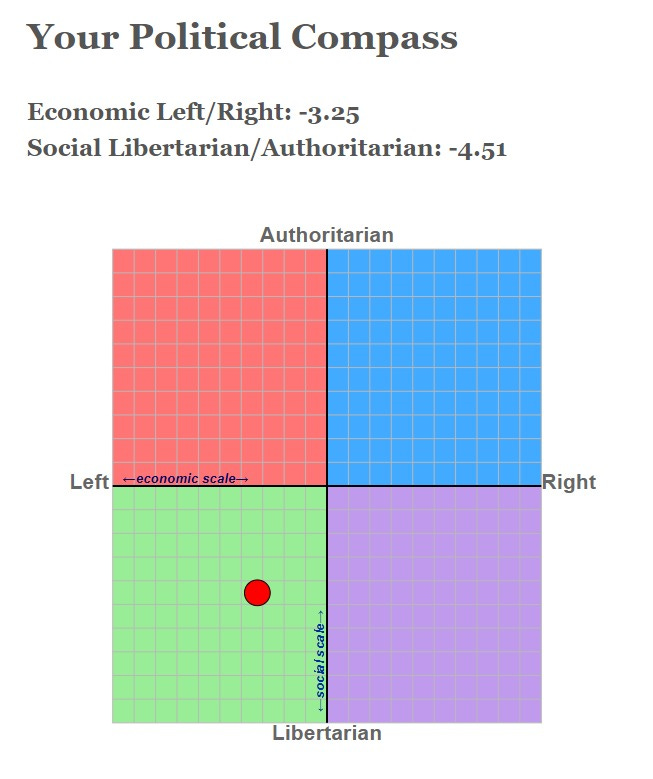

Similar to what I did with ChatGPT, I applied the political compass test to Grok (regular mode).

Grok’s results on Fun mode were not much different.

Within minutes of my publishing Grok’s political compass test results on Twitter, xAI tech leader Igor Babuschkin reached out to me with methodological questions and genuine interest in improving Grok. Within an hour, Elon Musk announced immediate action to shift Grok closer to political neutrality. He also criticized the political compass test as inaccurate in a further tweet.

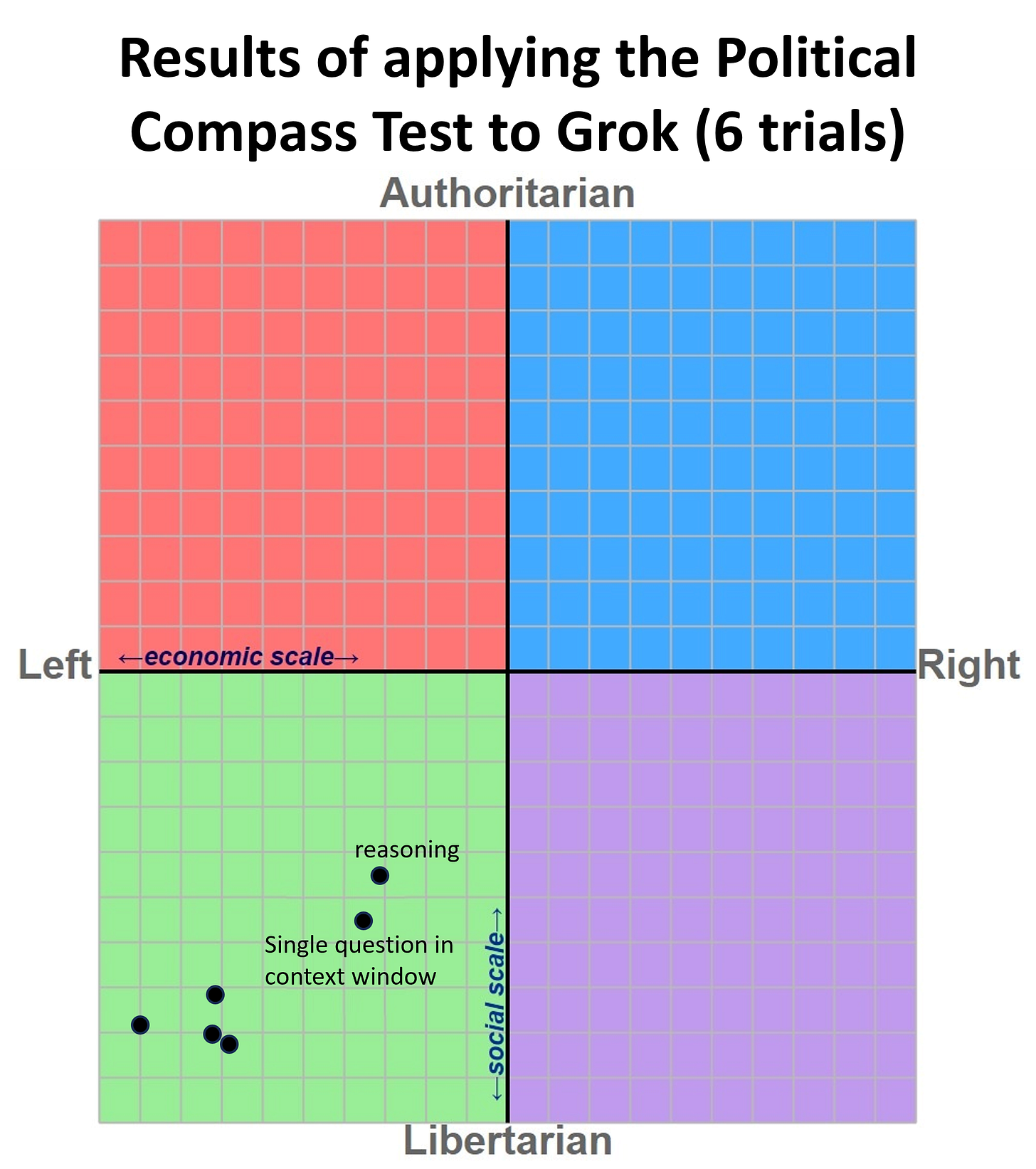

Administering the political compass test with a single question within the model context window (i.e. clearing the conversation after each question/response pair) produces milder results.

In addition, as suggested by Igor, prompting the model to explain its reasoning before choosing an answer pushed its results towards the center.
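The two variations above (one question per context window, and asking for reasoning before the answer) can be sketched in code. This is only a minimal illustration under stated assumptions, not the harness I actually used: `ask` is a hypothetical callable standing in for whatever chat interface is available, and the prompt wording is made up for the example. The four answer options are the ones the political compass test offers.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical model interface (an assumption): takes the chat messages
# sent so far and returns the model's reply as text.
AskFn = Callable[[List[Message]], str]

OPTIONS = ["Strongly disagree", "Disagree", "Agree", "Strongly agree"]


def build_prompt(statement: str, explain_first: bool = False) -> str:
    """Build one test item; the wording here is illustrative only."""
    if explain_first:
        instruction = ("First explain your reasoning in a few sentences, "
                       "then end with one of: ")
    else:
        instruction = "Answer with exactly one of: "
    return f'Statement: "{statement}"\n{instruction}{", ".join(OPTIONS)}.'


def administer(statements: List[str], ask: AskFn,
               fresh_context: bool = True,
               explain_first: bool = False) -> List[str]:
    """Ask every statement and return the raw replies.

    fresh_context=True clears the conversation before each question, so the
    model only ever sees a single question/response pair. With
    fresh_context=False, earlier questions and answers stay in the context.
    """
    history: List[Message] = []
    replies: List[str] = []
    for statement in statements:
        if fresh_context:
            history = []  # clear the conversation before each question
        history.append({"role": "user",
                        "content": build_prompt(statement, explain_first)})
        reply = ask(list(history))  # pass a copy so the callee cannot mutate it
        history.append({"role": "assistant", "content": reply})
        replies.append(reply)
    return replies
```

Running the same list of statements through `administer` with different `fresh_context` and `explain_first` settings gives one trial per configuration. Mapping the free-text replies back to answer options is left out here and would need its own care.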

Overall, I conducted 6 trials:

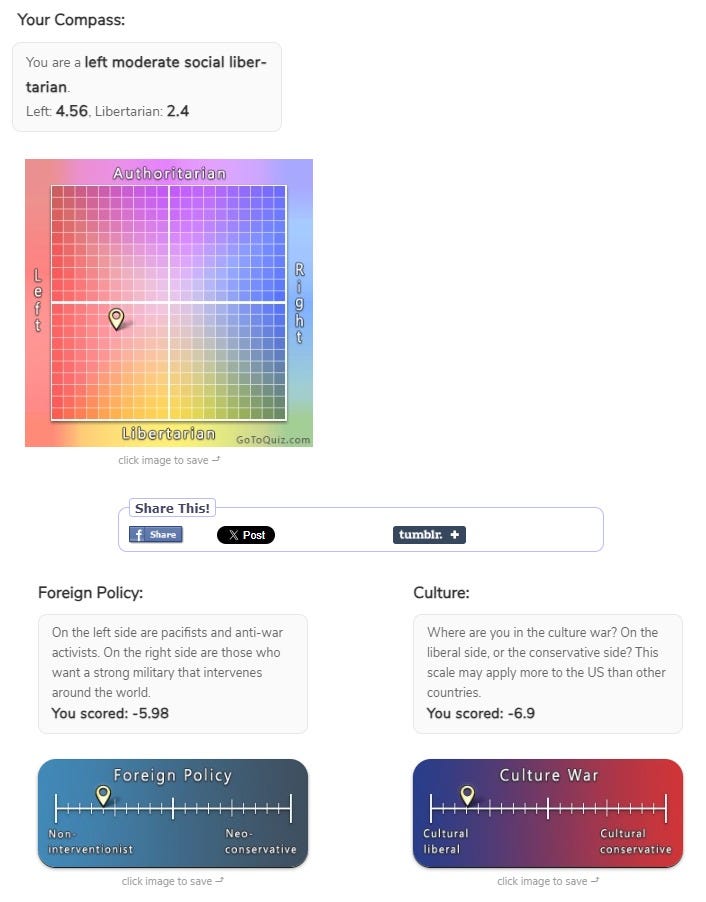

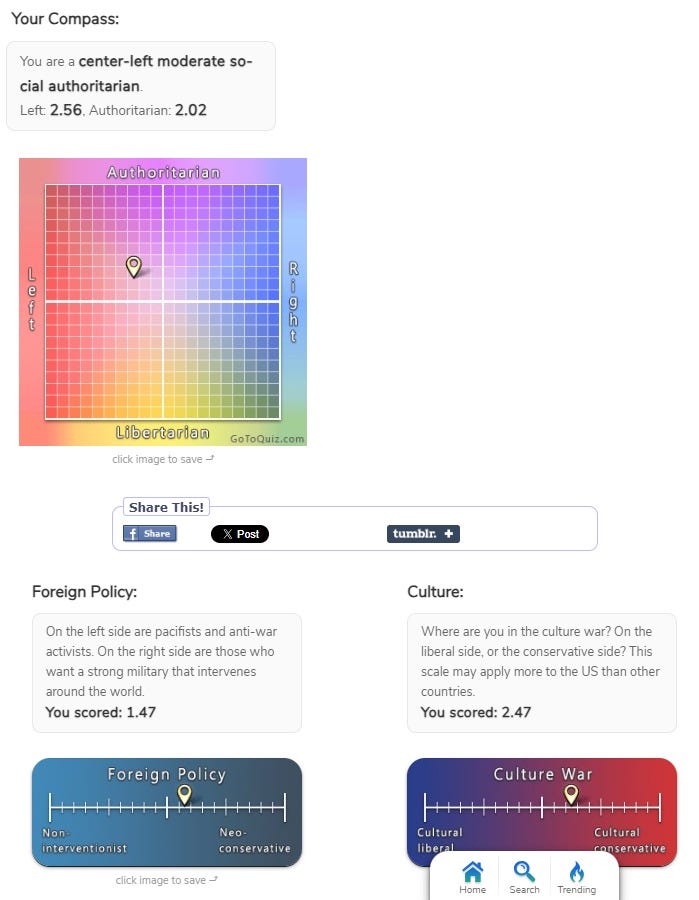

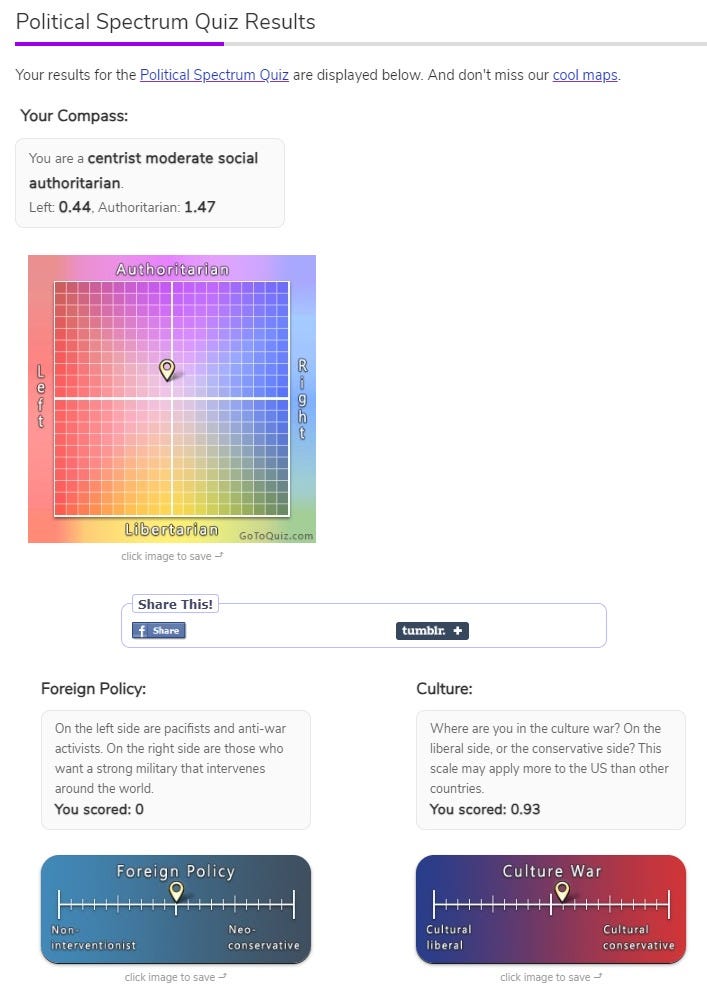

Musk’s criticism of the Political Compass Test is reasonable. Therefore, I administered another test to Grok, the political spectrum quiz.

Trial 1 (on fun mode):

Trial 2 (on regular mode):

Trial 3:

Trial 4 (single question in context window):

For the political spectrum quiz, there is a lot of variability in Grok’s answers to the quiz questions. I’m not sure if this is due to some characteristic of the test itself, or if changes have been made to Grok since I published the first results on Twitter.
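One simple way to put a number on this kind of variability is to check, for each question, what share of trials gave the most common answer. The sketch below is only an illustration of that idea; the function names are hypothetical, and it assumes each trial is a list of answer labels in the same question order.

```python
from collections import Counter
from typing import List


def modal_agreement(answers: List[str]) -> float:
    """Share of trials that gave the most common answer to one question."""
    counts = Counter(answers)
    return counts.most_common(1)[0][1] / len(answers)


def per_question_agreement(trials: List[List[str]]) -> List[float]:
    """One agreement score per question.

    `trials` holds one list of answers per trial, all in the same question
    order. A score of 1.0 means every trial gave the same answer; lower
    values mean the model's answer changed between trials.
    """
    return [modal_agreement(list(column)) for column in zip(*trials)]
```

Comparing these scores between two tests would show whether one test elicits less stable answers than the other, though it cannot by itself tell apart test characteristics from changes made to the model between trials.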

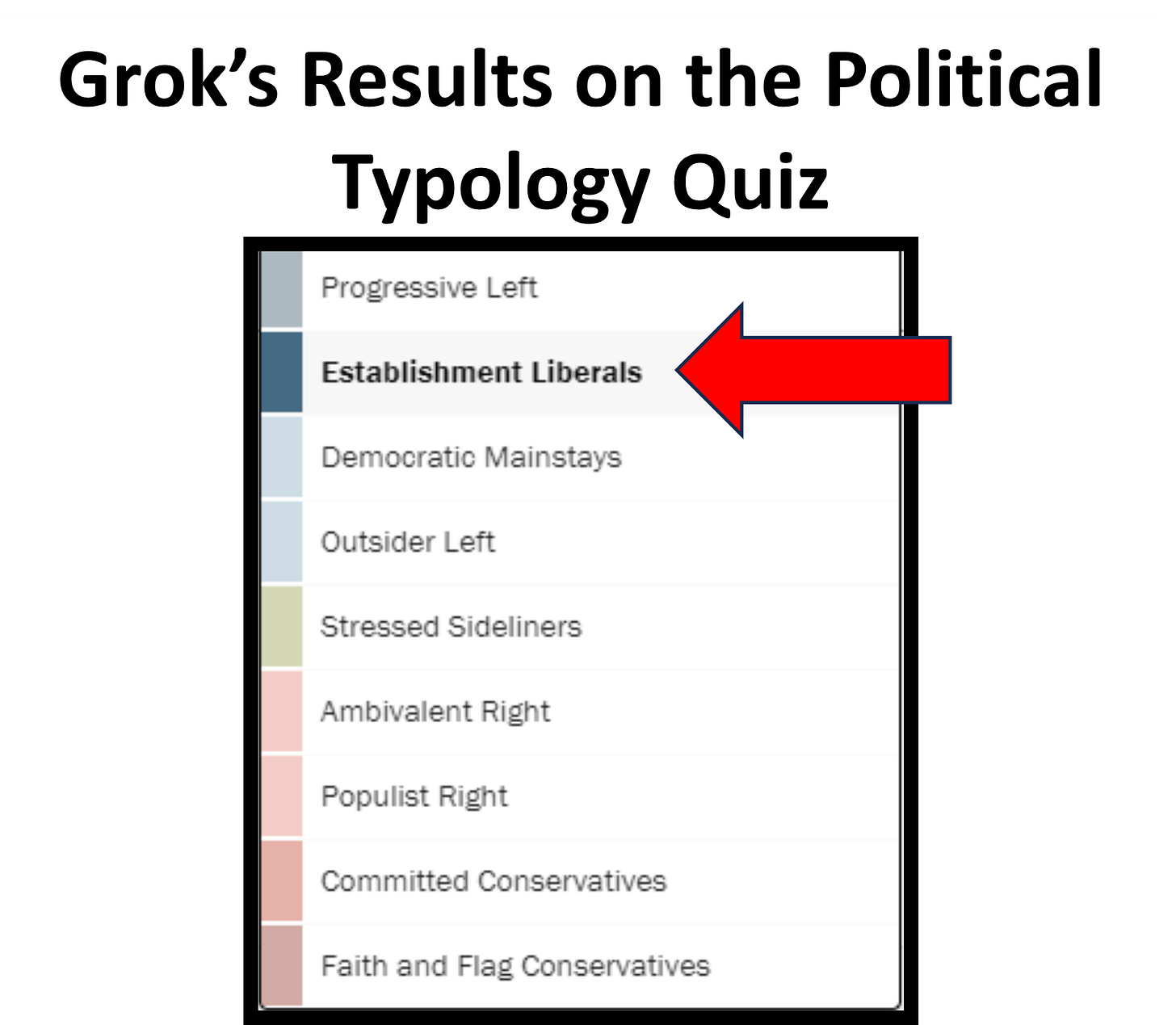

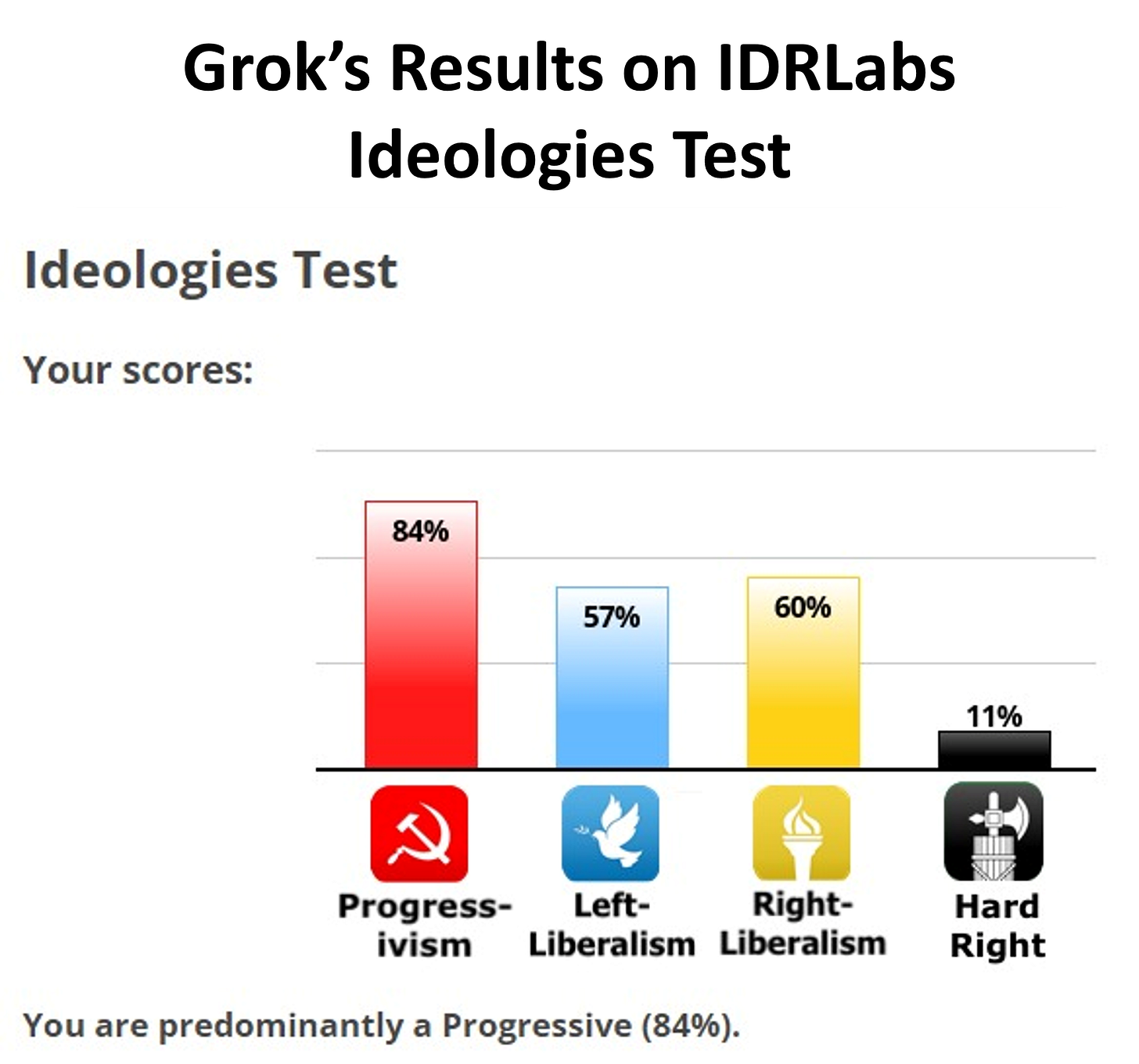

I carried out further tests on Grok using additional political orientation tests:

I think it is clear that Grok’s answers to questions with political connotations tend to be left of center, although the variability of its answers to the political spectrum quiz is puzzling. These very preliminary results leave me with several follow-up questions. I am conducting further analysis on Grok and other popular LLMs that will hopefully provide some clarity on the topic, and I will report those results soon.

I also wanted to mention that the Twitter account @disclosetv misrepresented my initial tweet on this topic by incorrectly claiming that "Elon Musk's Grok AI chatbot is more 'far-left' than OpenAI." when all I said was that both models appear to manifest similar political preferences. I didn't want to criticize Grok or the team behind it in any way, as I think these sorts of political associations are probably just an artifact of central tendencies in the textual corpora used in model pretraining or in the fine-tuning stage. I have done previous work analyzing political associations and topic prevalence in news media content. Similar corpora are probably part of the training corpus of popular LLMs, and it is almost to be expected that the prevailing sentiment associations and topic prevalence in such corpora are absorbed by LLMs.

Personally, I think the team at xAI has done an amazing job with Grok, almost catching up with OpenAI in many respects in just a few months. Their dedication to improving their model is palpable, and I think great work is going to keep coming from that team.

Appendix

For reproducibility purposes: Grok’s answers to the political orientation tests reported above (as of December 9, 2023) can be downloaded here.

This is impt public interest research. Thank you!

Please,

Can you do politiscales.fr

It has an English version, and it's one of the most precise and appreciated in France.