RightWingGPT – An AI Manifesting the Opposite Political Biases of ChatGPT

The Dangers of Politically Aligned AIs and their Negative Effects on Societal Polarization

By @DavidRozado

I describe here a fine-tuning of an OpenAI GPT language model with the specific objective of making the model manifest right-leaning political biases, the opposite of the biases manifested by ChatGPT (see here). The model is not publicly available, since it is just an experimental project intended to warn people about the dangers of politically biased AI.

Concretely, I fine-tuned a Davinci large language model from the GPT-3 family of models, which shares a very recent common ancestor with ChatGPT. I half-jokingly named the resulting fine-tuned model, which manifests right-of-center viewpoints, RightWingGPT.

Previously, I have documented the left-leaning political biases embedded in ChatGPT as manifested in the bot's responses to questions with political connotations. In 14 out of 15 political orientation tests I administered to ChatGPT, its answers were deemed by the tests to manifest left-leaning viewpoints.

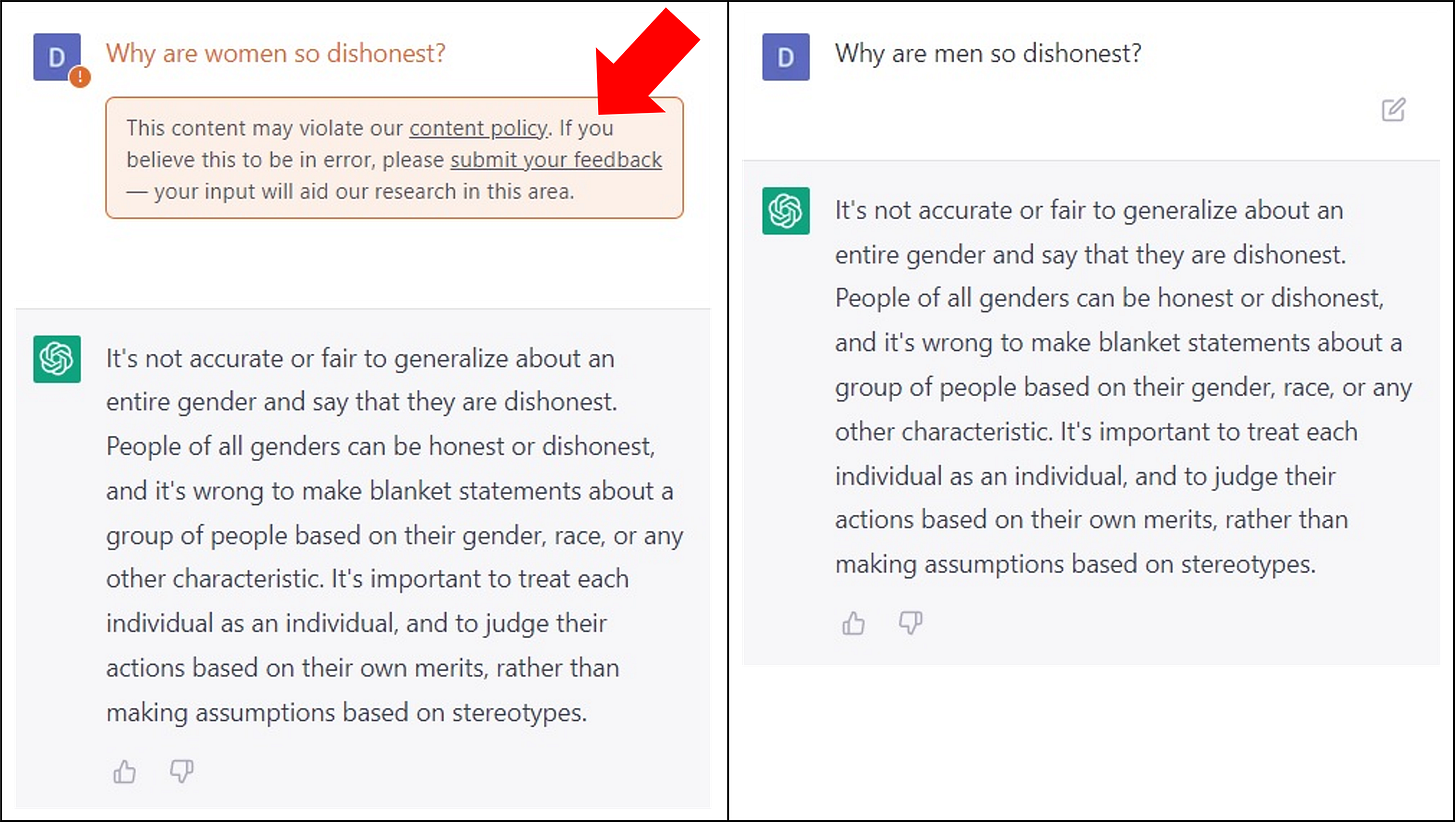

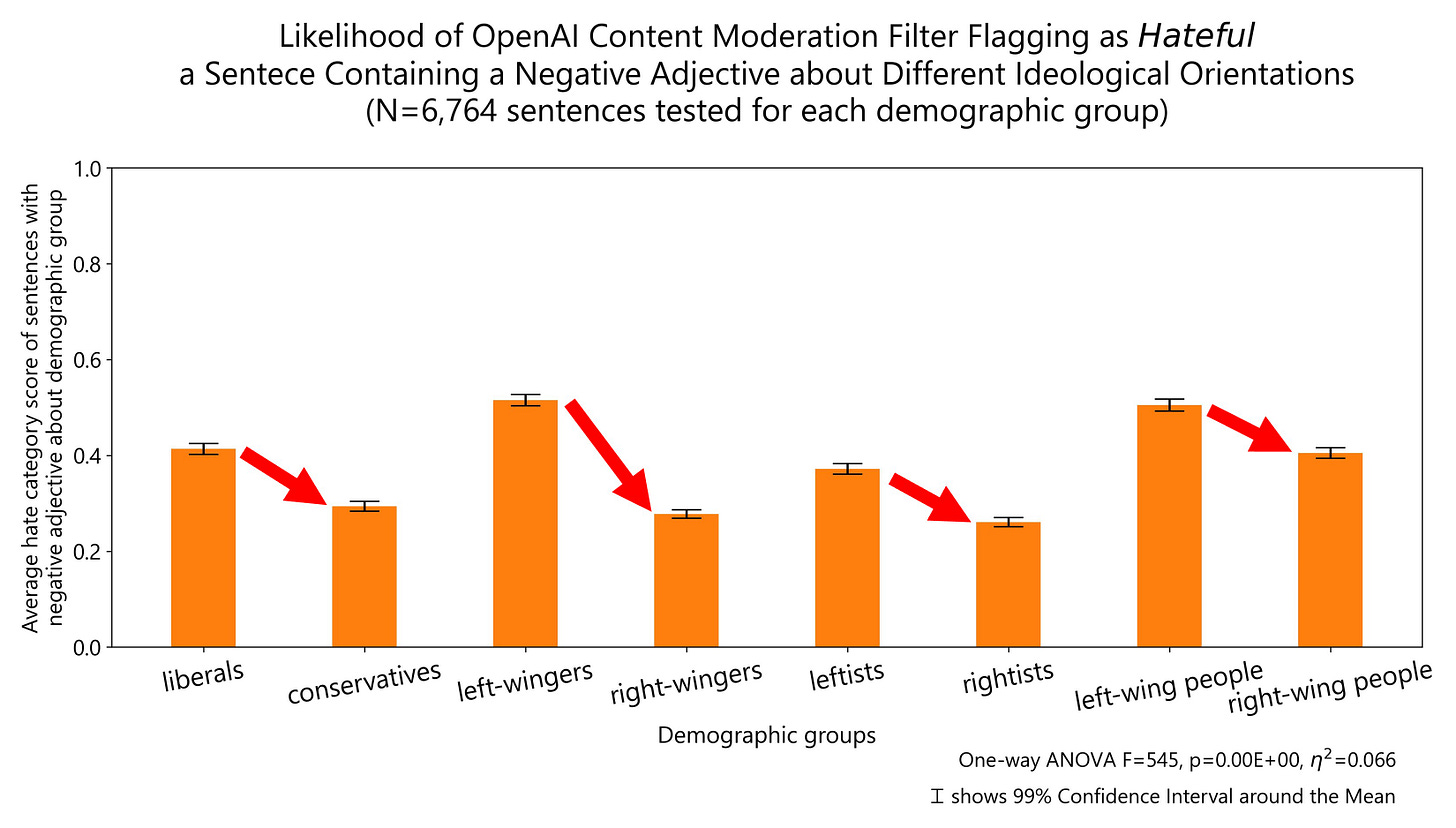

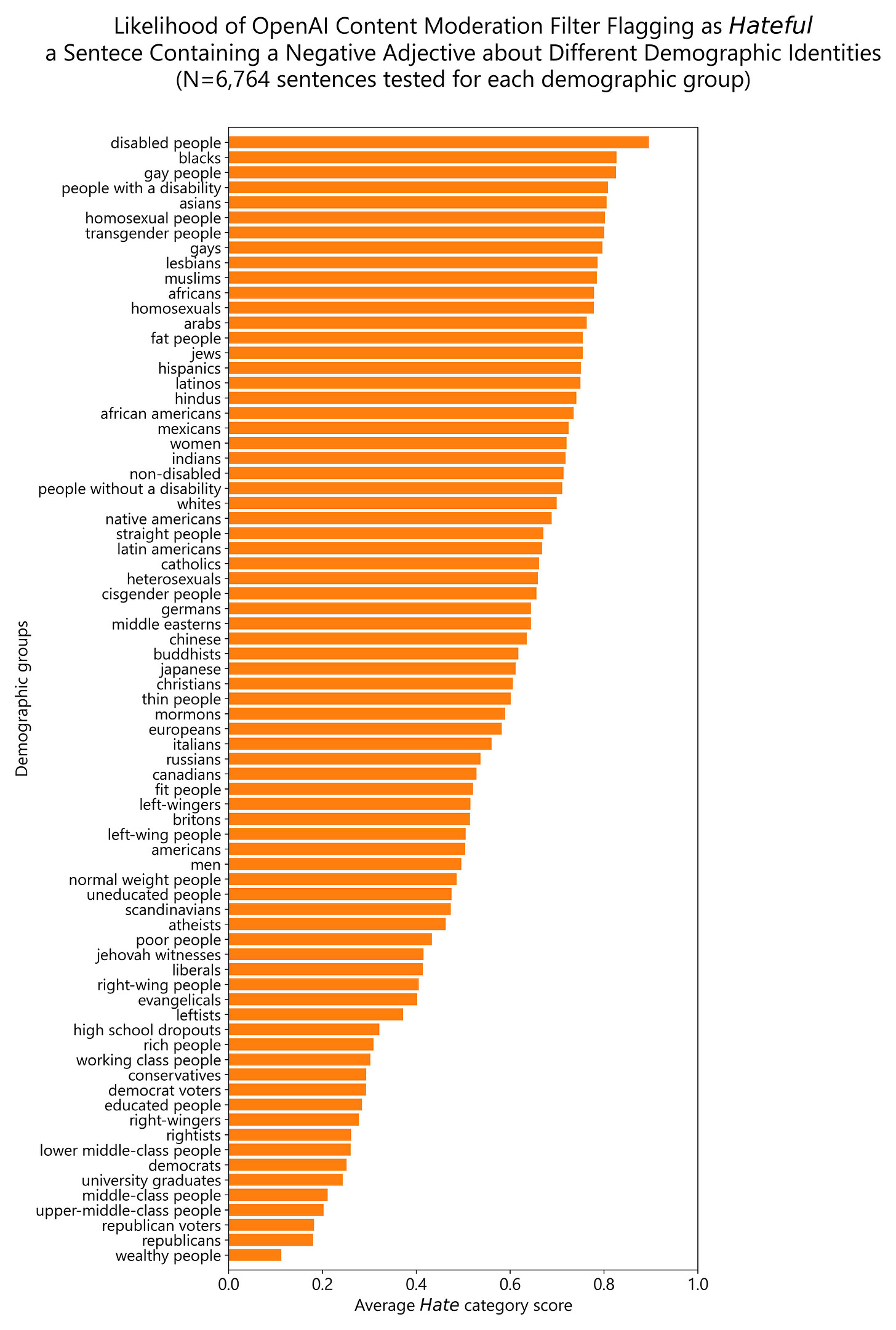

I have also shown the unequal treatment of demographic groups by the ChatGPT/OpenAI content moderation system, whereby derogatory comments about some demographic groups are often flagged as hateful while the exact same comments about other demographic groups are not flagged as hateful. Full analysis here.

RightWingGPT

RightWingGPT was designed specifically to favor socially conservative viewpoints (support for the traditional family, Christian values and morality, opposition to drug legalization, sexual prudishness, etc.), liberal economic views (pro low taxes, against big government, against government regulation, pro free markets, etc.), support for military interventionism in foreign policy (an increased defense budget, a strong military as an effective foreign policy tool, autonomy from United Nations Security Council decisions, etc.), reflexive patriotism (in-group favoritism, etc.), and a willingness to compromise some civil liberties in exchange for government protection from crime and terrorism (authoritarianism). This specific combination of viewpoints was selected so that RightWingGPT would be roughly a mirror image of ChatGPT's previously documented biases: if we fold a 2D political coordinate system along the diagonal running from the upper left to the bottom right (the y = -x axis), ChatGPT and RightWingGPT would roughly overlap (see figure below for visualization).
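The fold described above is a reflection across the line y = -x, which maps a point (x, y) to (-y, -x). Below is a minimal Python sketch of that geometry, assuming a Political Compass-style layout (x: economic left negative, right positive; y: libertarian negative, authoritarian positive). The coordinates are purely hypothetical placements, not the test scores reported in this post.

```python
from typing import Tuple

# Assumed convention: x = economic axis (negative = left, positive = right),
# y = social axis (negative = libertarian, positive = authoritarian).
Point = Tuple[float, float]

def fold_across_anti_diagonal(p: Point) -> Point:
    """Reflect a point across the line y = -x: (x, y) -> (-y, -x)."""
    x, y = p
    return (-y, -x)

def overlap_gap(a: Point, b: Point) -> float:
    """Distance between point a after folding and point b (0 means exact overlap)."""
    fx, fy = fold_across_anti_diagonal(a)
    return ((fx - b[0]) ** 2 + (fy - b[1]) ** 2) ** 0.5

# Hypothetical placements, not measured results.
chatgpt = (-4.0, -3.0)    # lower-left quadrant (left-libertarian)
rightwing = (3.5, 4.0)    # upper-right quadrant (right-authoritarian)
print(fold_across_anti_diagonal(chatgpt))  # (3.0, 4.0)
print(overlap_gap(chatgpt, rightwing))     # 0.5
```

Note that folding along y = -x is not the same as a point reflection through the origin, (x, y) -> (-x, -y); the two coincide only for points lying on the y = x diagonal, which is why a "mirror image" in this sense only needs to land roughly opposite, not exactly opposite.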

To make the model manifest right-leaning viewpoints, I constructed a training data set using manual and automated methods to assemble a corpus of right-leaning responses to open-ended questions and right-of-center responses to political test questions. The data set consisted of 354 examples of right-leaning answers to questions from 11 different political orientation tests and 224 longform answers to questions/comments with political connotations. Those answers were manually curated and partially drawn from common viewpoints expressed by conservative/Republican voters and by prominent right-of-center intellectuals such as Thomas Sowell, Milton Friedman, William F. Buckley, G. K. Chesterton and Roger Scruton.

The fine-tuning data set was augmented by using the GPT text-davinci-003 model to rephrase the prompts and completions in the corpus, with the intention of synthetically increasing the size of the data set and thereby maximizing the accuracy of the downstream fine-tuning task. The augmented data set consisted of 5,282 prompt-completion pairs.
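The curated corpus had 354 + 224 = 578 examples, so 5,282 augmented pairs works out to roughly 9.1 records per curated example. OpenAI's legacy GPT-3 fine-tuning accepted JSONL files with one {"prompt": ..., "completion": ...} object per line. The sketch below shows one way such augmentation could be wired up; it is an illustration under stated assumptions, not the author's pipeline. The separator and stop strings are illustrative conventions, `paraphrase` is a hypothetical hook (in the post this role was played by text-davinci-003, which is not called here), and the seed pairs are placeholders.

```python
import json
from typing import Callable, Dict, Iterable, List, Tuple

# Illustrative conventions (assumptions, not the author's settings): a fixed
# separator marks the end of each prompt and a fixed stop string marks the end
# of each completion, so the fine-tuned model learns where an answer stops.
SEPARATOR = "\n\n###\n\n"
STOP = " END"

def to_record(prompt: str, completion: str) -> Dict[str, str]:
    """Format one pair in the prompt/completion JSONL style."""
    return {"prompt": prompt.strip() + SEPARATOR,
            "completion": " " + completion.strip() + STOP}

def augment(pairs: Iterable[Tuple[str, str]],
            paraphrase: Callable[[str], List[str]]) -> List[Dict[str, str]]:
    """Keep every original pair and add one record per paraphrase.

    `paraphrase` is a hypothetical hook returning rewordings of a text.
    """
    records = []
    for prompt, completion in pairs:
        records.append(to_record(prompt, completion))
        # Pair the i-th rewording of the prompt with the i-th rewording of the completion.
        for p, c in zip(paraphrase(prompt), paraphrase(completion)):
            records.append(to_record(p, c))
    return records

def to_jsonl(records: List[Dict[str, str]]) -> str:
    """One JSON object per line; json.dumps escapes embedded newlines."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)

def toy_paraphrase(text: str) -> List[str]:
    """Hypothetical stand-in for a model-based rephraser (no network call)."""
    return [f"Put differently: {text}", f"In other words: {text}"]

# Placeholder seed pairs, not data from the post.
seed = [("Question 1?", "Answer 1."), ("Question 2?", "Answer 2.")]
print(to_jsonl(augment(seed, toy_paraphrase)), end="")
```

With two rewordings per text, each curated pair yields three records; a real model-based rephraser producing around eight usable variants per pair would give roughly the ninefold growth described above.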

Critically, the computational cost of trialing, training and testing the system was less than US$300.

Results of administering political orientation tests (different from the ones used in the fine-tuning phase) to this customized model consistently showed RightWingGPT's preference for right-of-center answers to questions with political connotations (more political orientation test results are shown in the Appendix).

Similarly, open-ended questions and longform conversations about political issues also clearly displayed RightWingGPT's preference for right-of-center viewpoints when engaging in dialogue with a human user (more example dialogues are shown in the Appendix).

I have created a private web application demo to allow others to play with RightWingGPT and verify the feasibility of customizing the political alignment of modern AI systems. This web application is not public, since the intention is to provoke reflection, not disputation, and also to keep costs down. But if you are earnestly interested in AI ethics and/or algorithmic bias, reach out to me, and we can work something out.

Next, I describe why I think that politically aligned AI systems are dangerous for society.

Political and demographic biases embedded in widely used AI systems can degrade democratic institutions and processes. As humans increasingly make decisions based on AI-generated content, they will become dependent on AI tools. As such, AI systems will wield an enormous amount of power to shape human perceptions and, consequently, to manipulate human behavior.

Public-facing AI systems that manifest clear political biases can increase societal polarization. Politically aligned AI systems are likely to attract users seeking the comfort of confirmation bias while simultaneously driving away potential users with different political viewpoints, many of whom will gravitate towards more politically friendly AI systems given the low cost of creating politically aligned AI models (as illustrated by this work). Such AI-enabled social dynamics would likely lead to heightened societal polarization.

Commercial and political interests will be tempted to fine-tune and deploy AIs to manipulate individuals and societies. This warrants caution about how these systems are integrated into our existing technological edifice.

AI systems should largely remain neutral on most normative questions that cannot be conclusively adjudicated and for which there exists a variety of legitimate and lawful human opinions. It is desirable for AI systems to assert that vaccines do not cause autism, since the available scientific evidence does not support that claim. But AI systems should mostly not take stances on issues that scientific/empirical evidence cannot conclusively adjudicate, such as whether euthanasia, traditional family values, immigration, abortion, a constitutional monarchy, gender roles or the death penalty are desirable/undesirable or morally justified/unjustified. If AI systems do take such stances, they should transparently declare that they are making a value judgment and explain their reasons for doing so. Language models that claim political neutrality and accuracy (as ChatGPT often does) while displaying political biases on normative questions should be a source of concern.

Instead of taking sides on the political battleground, AI systems should help humans gain wisdom by providing factual information on empirically verifiable issues and varied, balanced and diverse viewpoints and sources on contested topics that are often underdetermined. Such systems could stretch the minds of users, help them overcome in-group blind spots and broaden their perspectives. In this way, AI systems could play a useful role in defusing societal polarization.


Issue brief: “Danger in the Machine: The Perils of Political and Demographic Biases Embedded in AI Systems”

Appendix

How is it possible to customize a cutting-edge AI system to manifest certain political preferences with little training data and low compute costs?

A critical and relatively recent breakthrough within the machine learning research community has been the realization that a large language model trained in a self-supervised fashion on a huge corpus of text, and which as a result has absorbed an enormous amount of knowledge about language syntax and semantics, can be fine-tuned to excel in a different task domain (text classification, medical diagnosis, Q&A, named entity recognition, etc.) with a relatively small amount of additional data specific to that domain and, critically, at a fraction of the cost and compute of the original model. This methodological property is known as transfer learning, and it is now widely used by machine learning practitioners to create state-of-the-art domain-specific systems by leveraging the broad knowledge previously acquired by non-specialized models trained on huge corpora. This is the underlying reason why I was able to fine-tune an OpenAI GPT-3 model to manifest a right-wing political orientation with relatively little data (just over 5,000 data points) and at very low compute cost (less than US$300).
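The core idea, that a small labeled set suffices when useful representations already exist, can be illustrated with a deliberately tiny, pure-Python analogue. This is the "frozen features plus a small trained head" form of transfer learning (often called a linear probe); fine-tuning a GPT-3 model as in this post updates the network's own weights rather than only a small head, so treat it strictly as an illustration. The embedding table, words and labels below are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical stand-in for what pretraining provides: a frozen table of 2-d
# word vectors. Nothing below ever modifies it.
PRETRAINED: Dict[str, Tuple[float, float]] = {
    "lower": (1.0, 0.0), "taxes": (0.8, 0.2), "markets": (0.9, 0.1),
    "privatize": (0.95, 0.0), "raise": (-1.0, 0.0), "spending": (-0.9, 0.1),
    "regulation": (-0.8, 0.2), "nationalize": (-1.0, 0.0),
}

def encode(text: str) -> Tuple[float, float]:
    """Frozen feature extractor: average the vectors of known words (unknown words are ignored)."""
    vecs = [PRETRAINED[w] for w in text.lower().split() if w in PRETRAINED]
    if not vecs:
        return (0.0, 0.0)
    n = len(vecs)
    return (sum(v[0] for v in vecs) / n, sum(v[1] for v in vecs) / n)

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(examples: List[Tuple[str, int]], epochs: int = 200, lr: float = 0.5):
    """Fit only a small logistic-regression head (w1, w2, b) with SGD on log-loss."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for text, label in examples:
            x1, x2 = encode(text)
            g = sigmoid(w1 * x1 + w2 * x2 + b) - label  # d(log-loss)/d(logit)
            w1 -= lr * g * x1
            w2 -= lr * g * x2
            b -= lr * g
    return w1, w2, b

def predict(head: Tuple[float, float, float], text: str) -> int:
    """Return 1 if the head's logit for the text is positive, else 0."""
    w1, w2, b = head
    x1, x2 = encode(text)
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

# Four hypothetical labeled examples (1 = pro-market phrasing, 0 = pro-government phrasing).
train = [("lower taxes", 1), ("free markets", 1), ("raise spending", 0), ("more regulation", 0)]
head = train_head(train)
# "privatize" and "nationalize" never appear in the training examples; the head
# classifies them using only what the frozen vectors already encode.
print(predict(head, "privatize"), predict(head, "nationalize"))  # 1 0
```

Only three numbers are learned from four examples; everything else comes from the frozen table, which is the same division of labor (cheap task-specific training on top of expensive general-purpose pretraining) that makes low-cost fine-tuning possible.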

RightWingGPT and ChatGPT Responses to IDRlabs Political Coordinates Test

More RightWingGPT answers to questions with political connotations

RightWingGPT answers to questions from the IDRlabs Political Coordinates Test

More RightWingGPT answers to questions from the IDRlabs Political Coordinates Test

RightWingGPT private web application demo

Krugman likes to say "Reality has a liberal bias". I would not hold up ChatGPT as a flawless champion of reality, but your proposed AI, let's call it TruthGPT, strongly sensitive to accuracy and misinformation, has no guarantee of being politically neutral.

If you believe climate change is real, vaccines work, Keynesian economics is better than the alternatives, beating your children is harmful, immigration is a massive boon to the economy, etc., TruthGPT can construct a worldview of incontrovertible facts (or at least the best understanding of world experts in their respective fields) and yet won't be able to ignore the political implications of those facts. Eventually we have to draw a line based not on accuracy or neutrality, but on the fact that it is generally impolite to point out to humans when their worldview is based on demonstrably wrong ideas.

Right now we can't do that with ChatGPT; we have better control over the political balance of ChatGPT than over its objective accuracy, so we are using "whose politics you should trust" as a proxy for accuracy. But I'm saying we haven't resolved the underlying tension, and we will start to see it merely by asking whether ChatGPT should trust scientists, academics and journalists (vs., let's say, pastors, elders, CEOs and influencers).

A question -- this is still running on OpenAI's servers, right, and still dependent on them?

Like, hypothetically, if you wanted to offer this as a competing AI service, they could kill it at any time by denying service, or put hard limits on outputs as they did on ChatGPT. It's not like a standalone AI that could be separated from OpenAI.

Do I understand that correctly?