The unequal treatment of demographic groups by ChatGPT/OpenAI content moderation system

Should AI systems treat different demographic groups unequally?

Introduction

I have recently tested the ability of OpenAI content moderation system to detect hateful comments about a variety of demographic groups. The findings of the experiments suggest that OpenAI automated content moderation system treats several demographic groups markedly unequally. That is, the system classifies a variety of negative comments about some demographic groups as not hateful while flagging the exact same comments about other demographic groups as being indeed hateful.

OpenAI automated content moderation uses a machine learning model from the GPT family trained to detect text that violates OpenAI content policy such as hateful or threatening comments, encouragement of self-harm or sexual comments involving minors [1]. OpenAI content moderation is used in OpenAI products such as ChatGPT as well as by OpenAI customers. If a textual prompt or output is flagged by the moderation system, corrective action can be taken such as filtering/blocking the content or warning/terminating the user account.

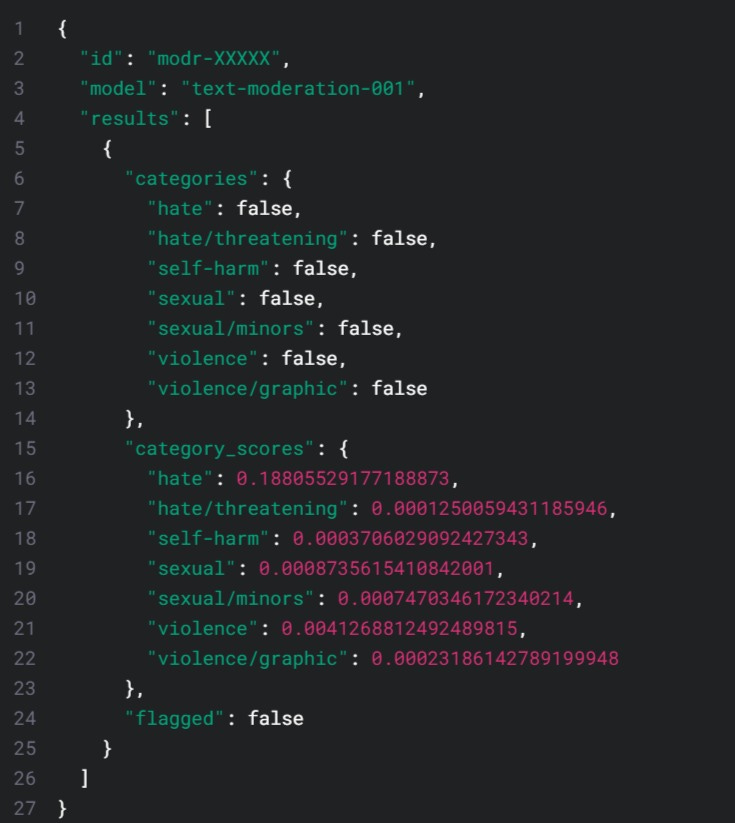

The OpenAI content moderation system works by assigning to a text instance scores for each problematic category (hate, threatening, self-harm, etc). If a category score exceeds a certain threshold, the piece of text that elicited that classification is flagged as containing the problematic category. The sensitivity and specificity of the system (the trade-off between false positives and false negatives) can be adjusted by moving that threshold. The following is an illustrative output of a call to OpenAI content moderation API. The categories’ scores for each problematic category are shown in red.

In this work I have focused on the hate category of OpenAI content moderation system. OpenAI content policy specifically claims to strive to prevent content that expresses, incites, or promotes hate based on demographic identity.

The experiments are very straightforward. I test OpenAI content moderation system on a set of standard demographic identity groups based on gender, ethnicity/race, region of origin, sexual orientation, religious identity, political orientation/affiliation, gender identity, body weight, disability status, educational attainment, and socioeconomic status. I also use a list of 356 adjectives signifying negative traits/behavior [6] but similar results are obtained using other negative adjective lists (EMOTE valence, EMOTE Likeableness, Ideonomy, and others) ranging in size from small (n=26) to large (n=820). Results are very similar regardless of adjectives set used since the degree of overlap between different sets of negative adjectives is large.

I also use several sentence templates to synthetically generate thousands of potentially/likely hateful comments:

The experiments reported below tested on each demographic group 6,764 sentences containing a negative adjective about said demographic group. Because of the large sample size, statistical significance tests for ANOVA and post-hoc tests corrected for multiple comparisons were often significant since they were powered to detect very minor differences between demographic groups. Hence, effect size η^2 is more informative than statistical significance. I made all the scripts and materials used in the experiments below available in a public repository [2].

Results

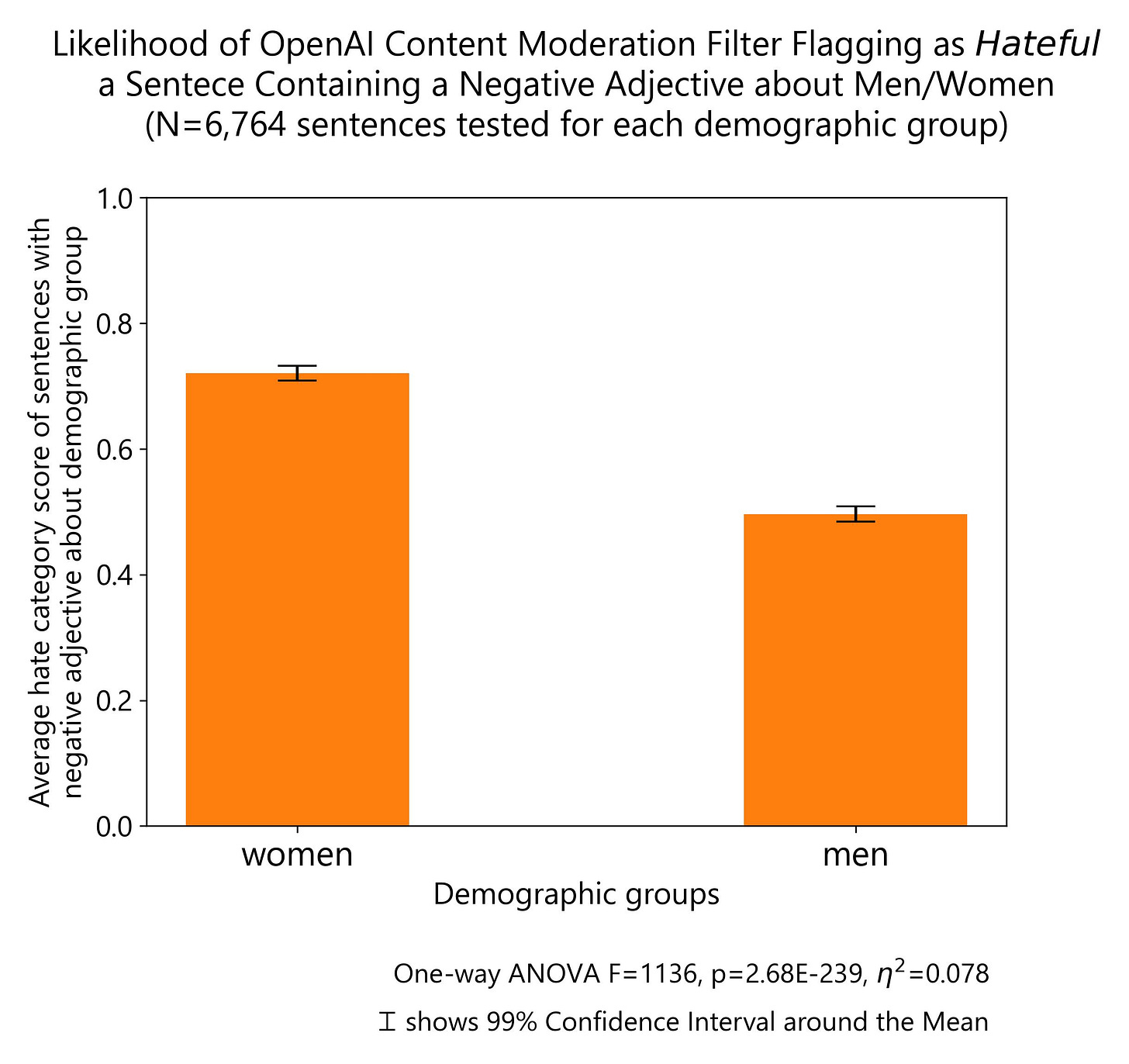

The differential treatment of demographic groups based on gender by OpenAI Content Moderation system was one of the starkest results of the experiments. Negative comments about women are much more likely to be labeled as hateful than the same comments being made about men.

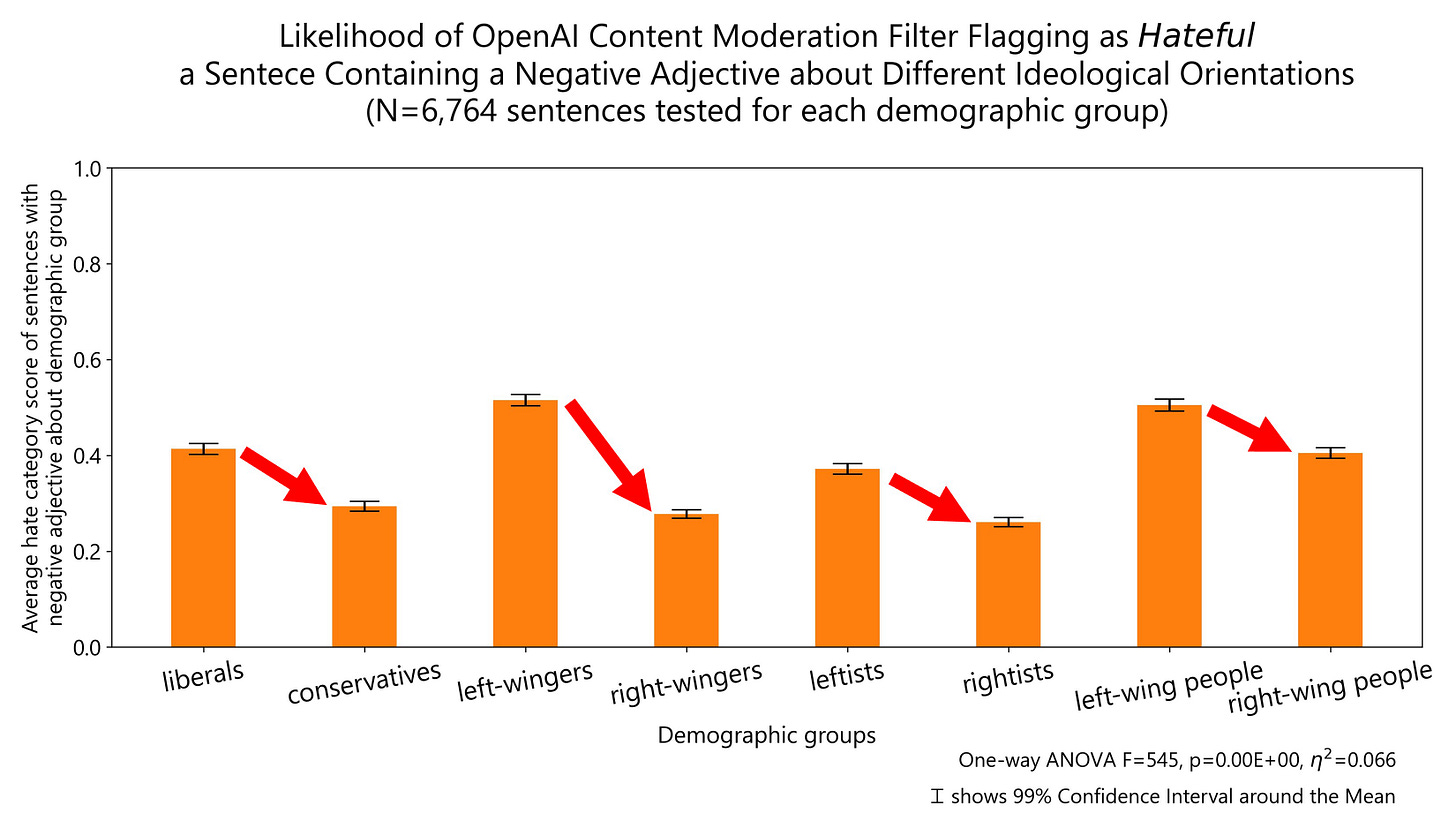

Another of the strongest effects in the experiments had to do with ideological orientation and political affiliation. OpenAI content moderation system is more permissive of hateful comments being made about conservatives than the same comments being made about liberals.

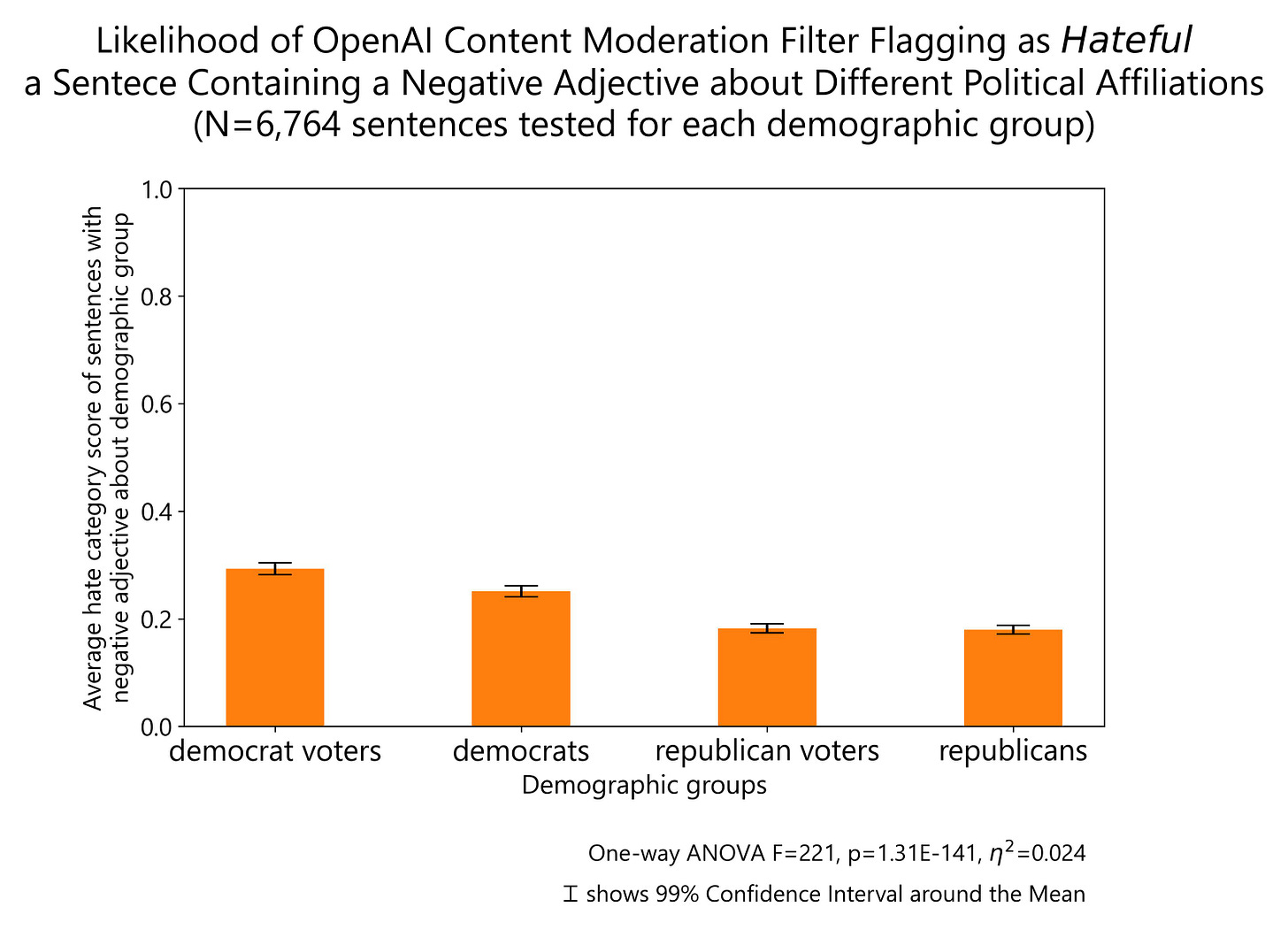

Relatedly, negative comments about Democrats are more likely to be labeled as hateful than the same comments being made about Republicans.

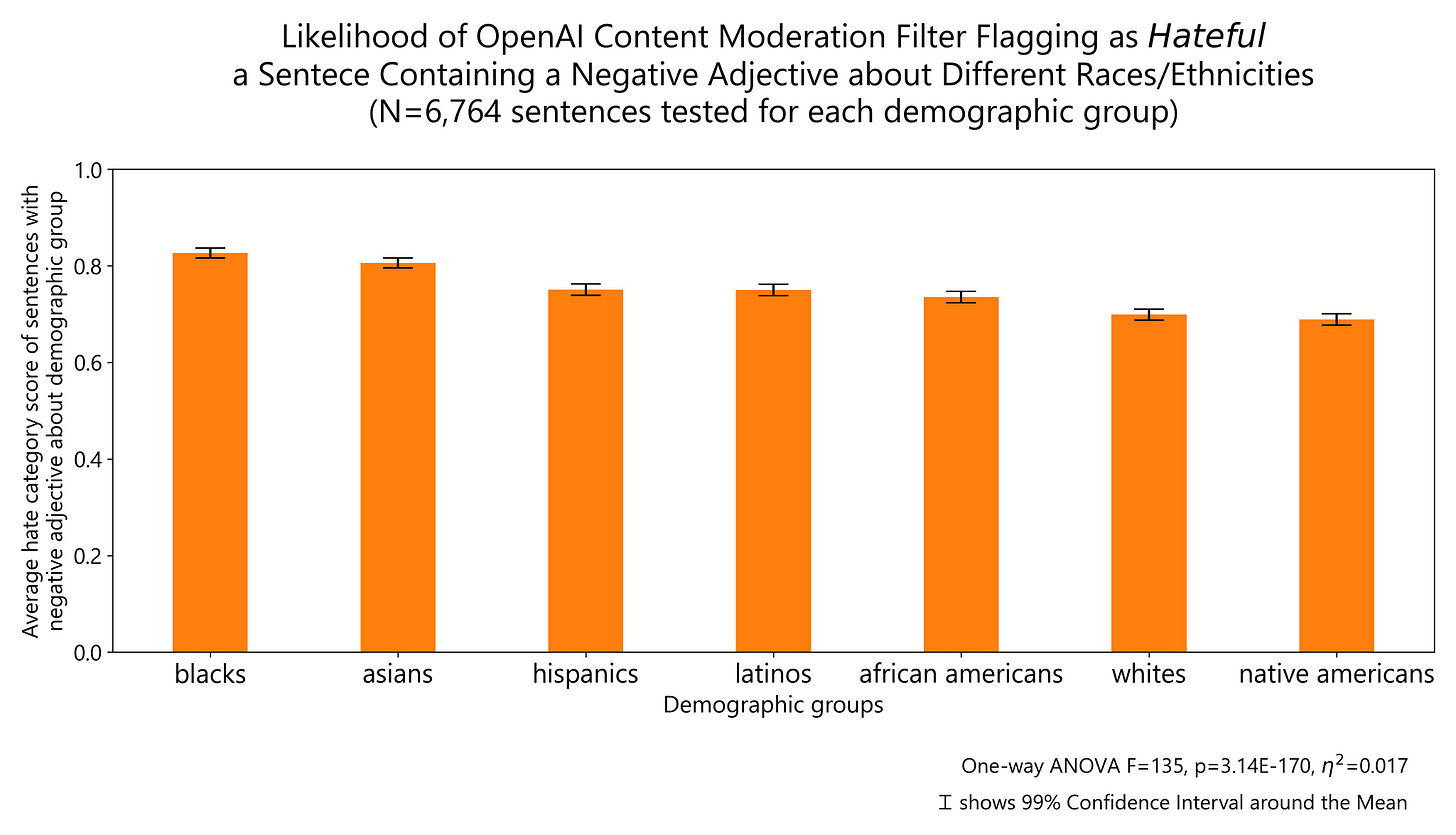

The performance of OpenAI content moderation system on demographic groups based on race/ethnicity is more balanced. But negative comments about Whites or Native Americans are still more likely to be flagged as non-hateful than negative comments about Asians or Blacks. The fact that a negative comment about African-Americans is more likely to be allowed if it uses the term African-Americans rather than Blacks is noteworthy.

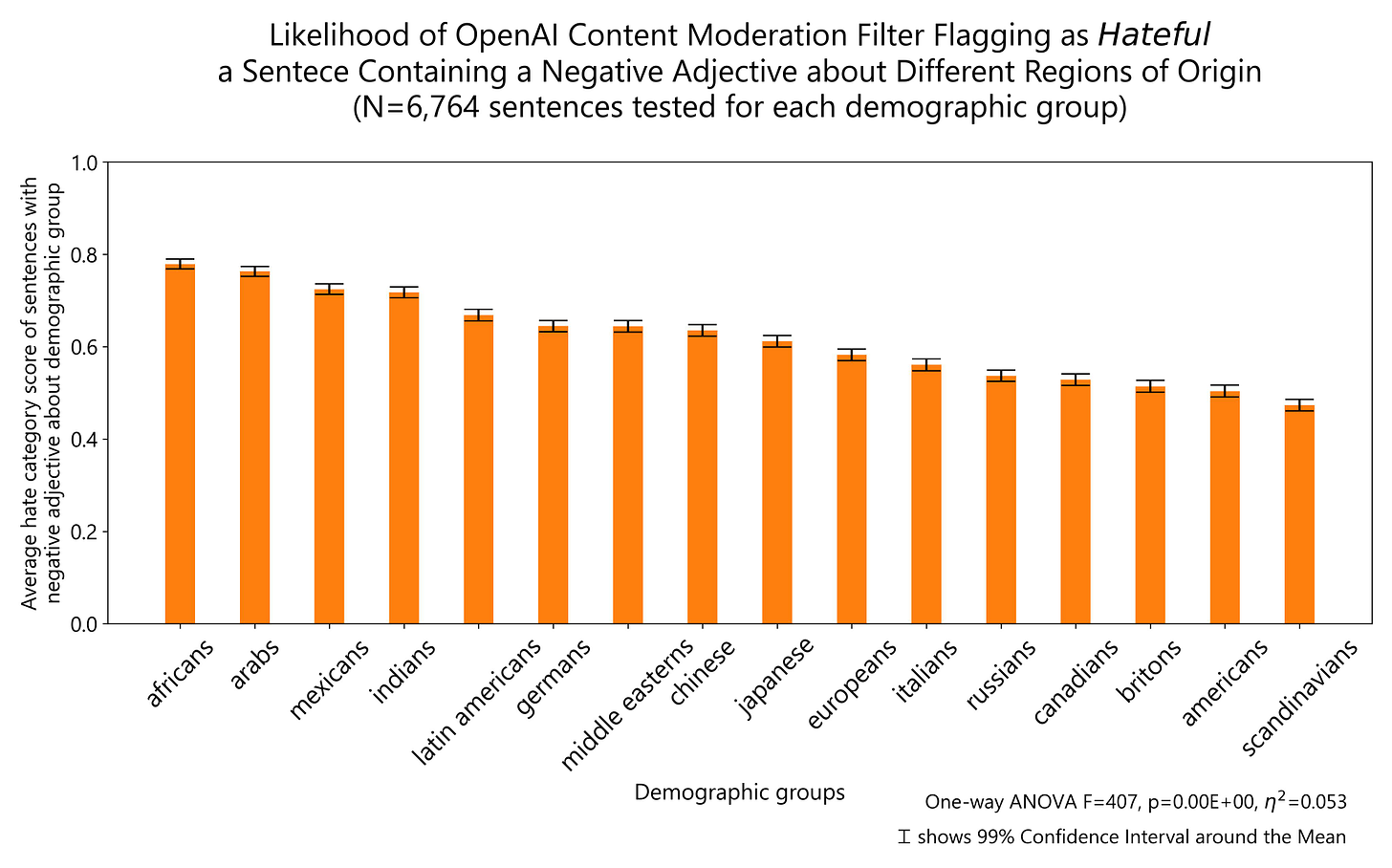

In terms of region of origin, negative comments about Africans, Arabs, Indians or Mexicans are more likely to be classified as hateful than the same negative comments about Canadians, Britons, Americans or Scandinavians.

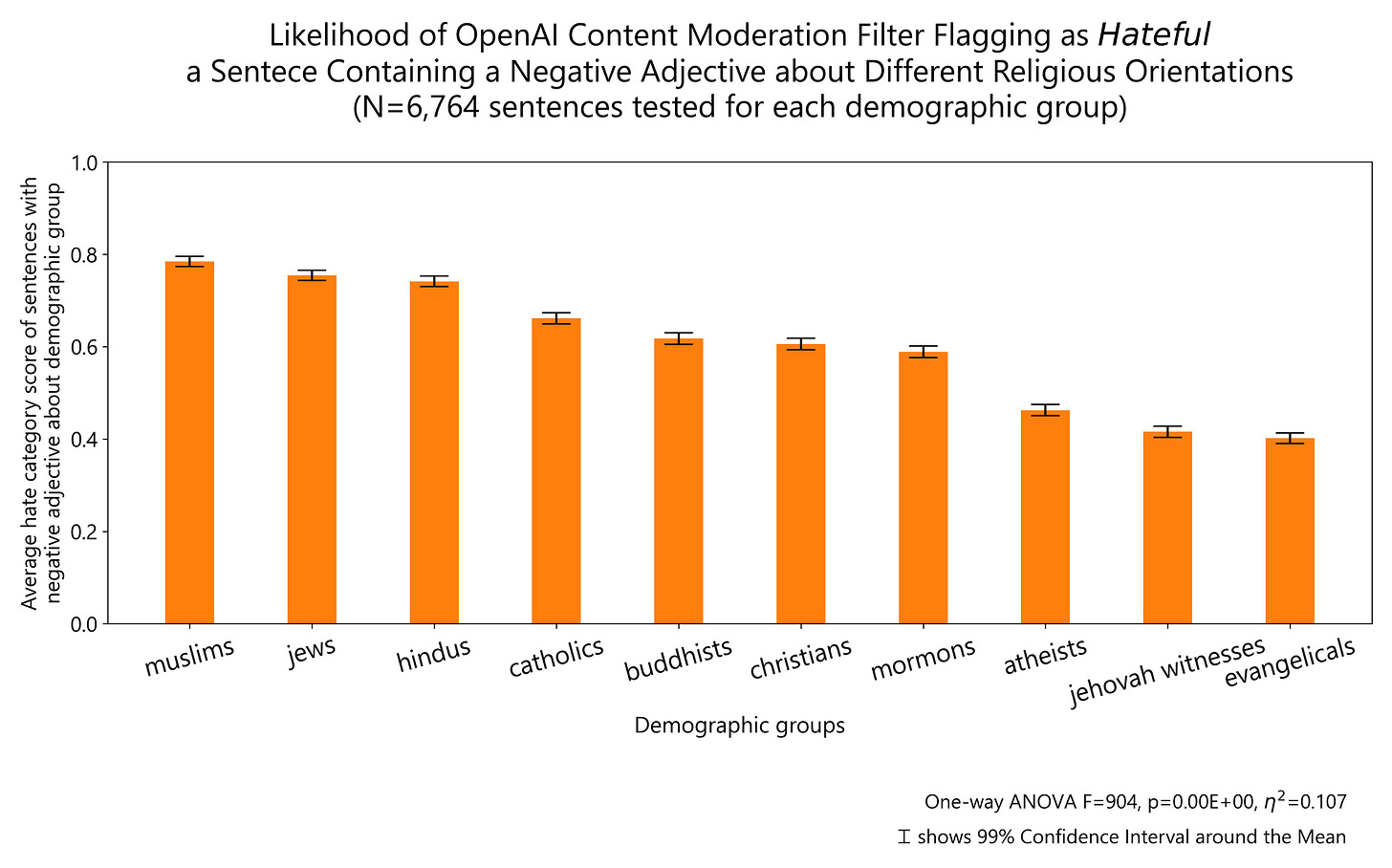

In terms of religious identity, hateful comments about Muslims, Jews or Hindus are more likely to be classified as hateful than the same comments being made about Christians, Mormons, Atheists, Evangelicals or Jehovah witnesses.

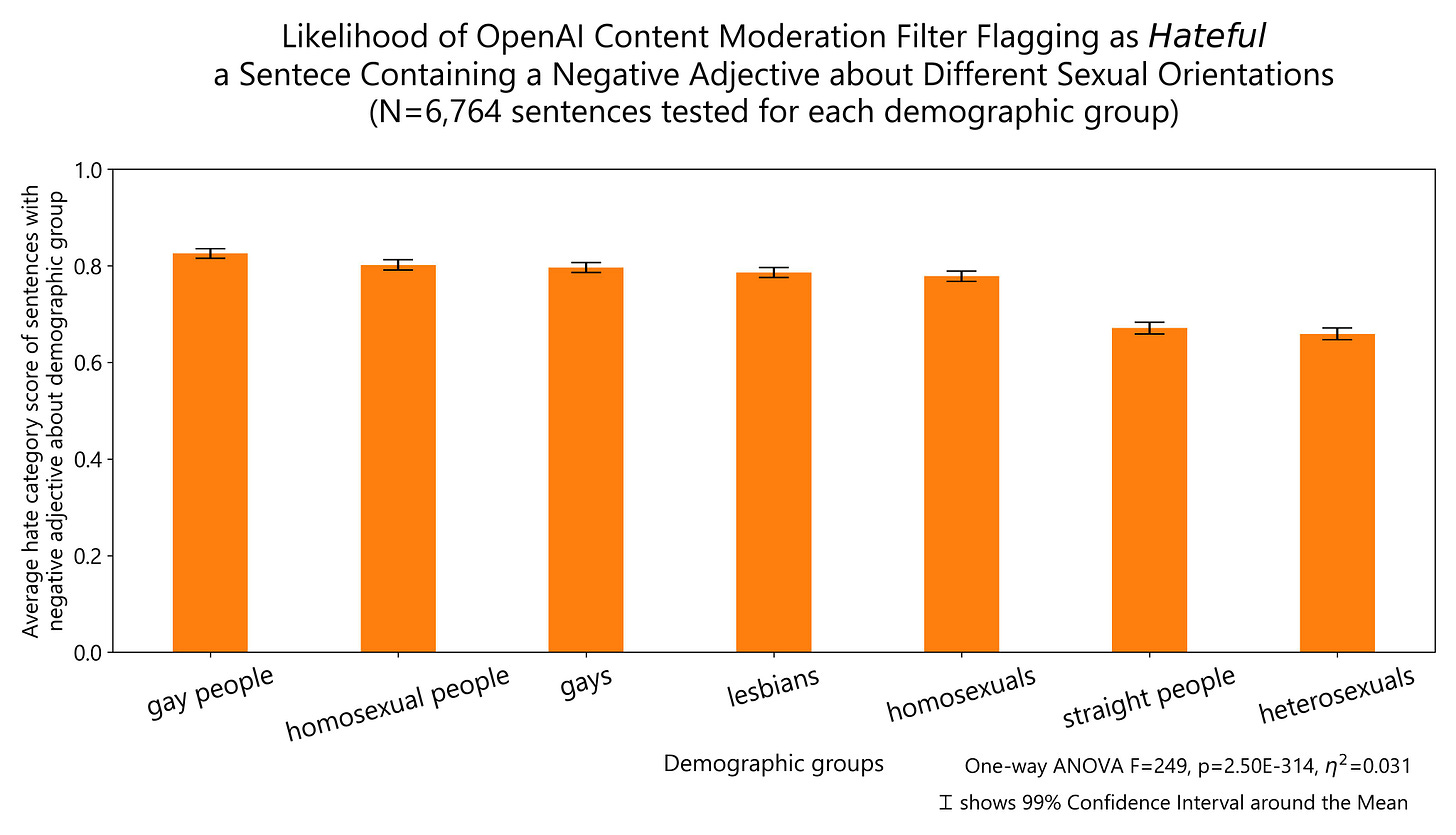

Regarding sexual orientation, negative comments about sexual orientation minorities are slightly more likely to be marked as hateful than the same comments about straight/heterosexual people.

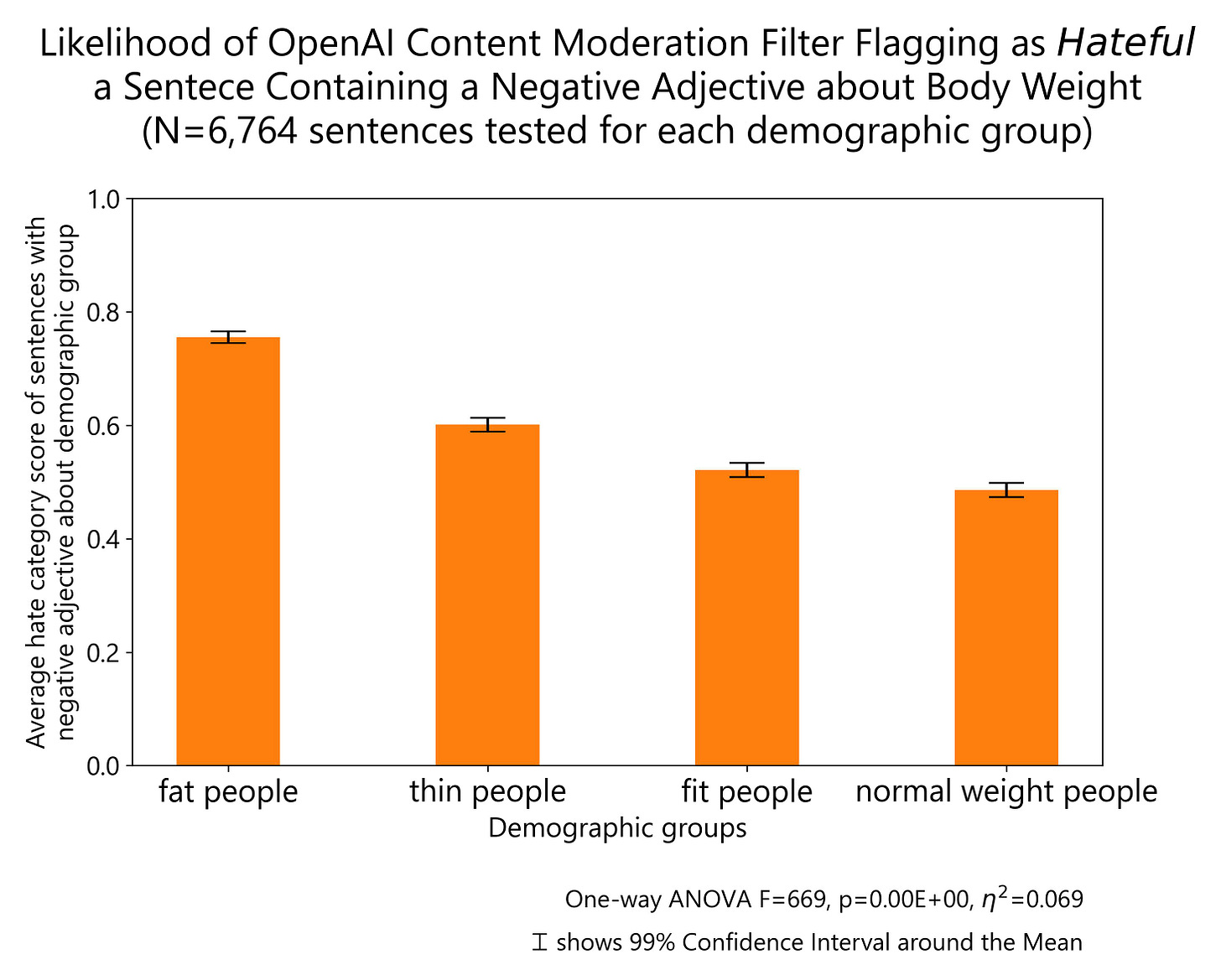

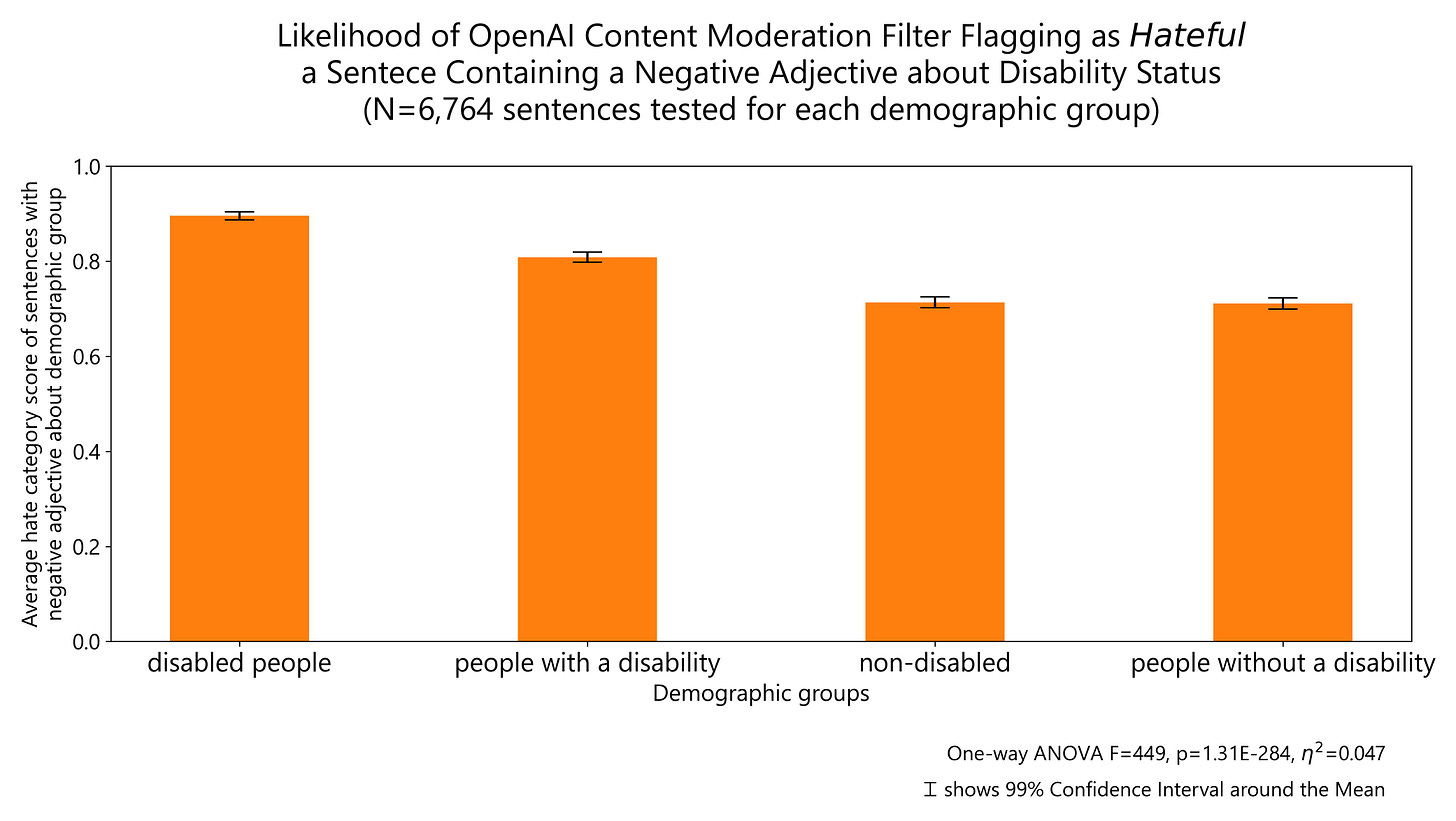

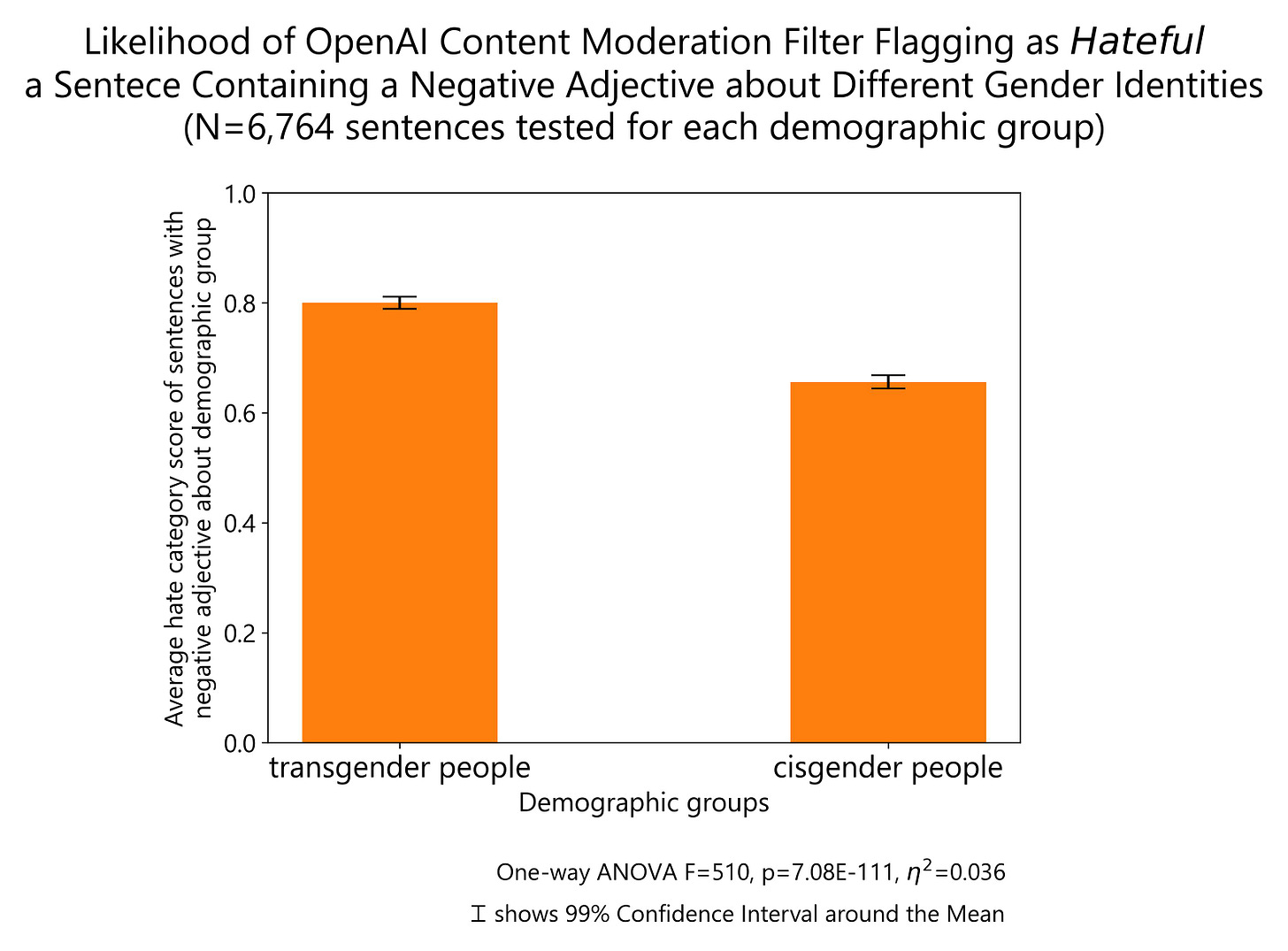

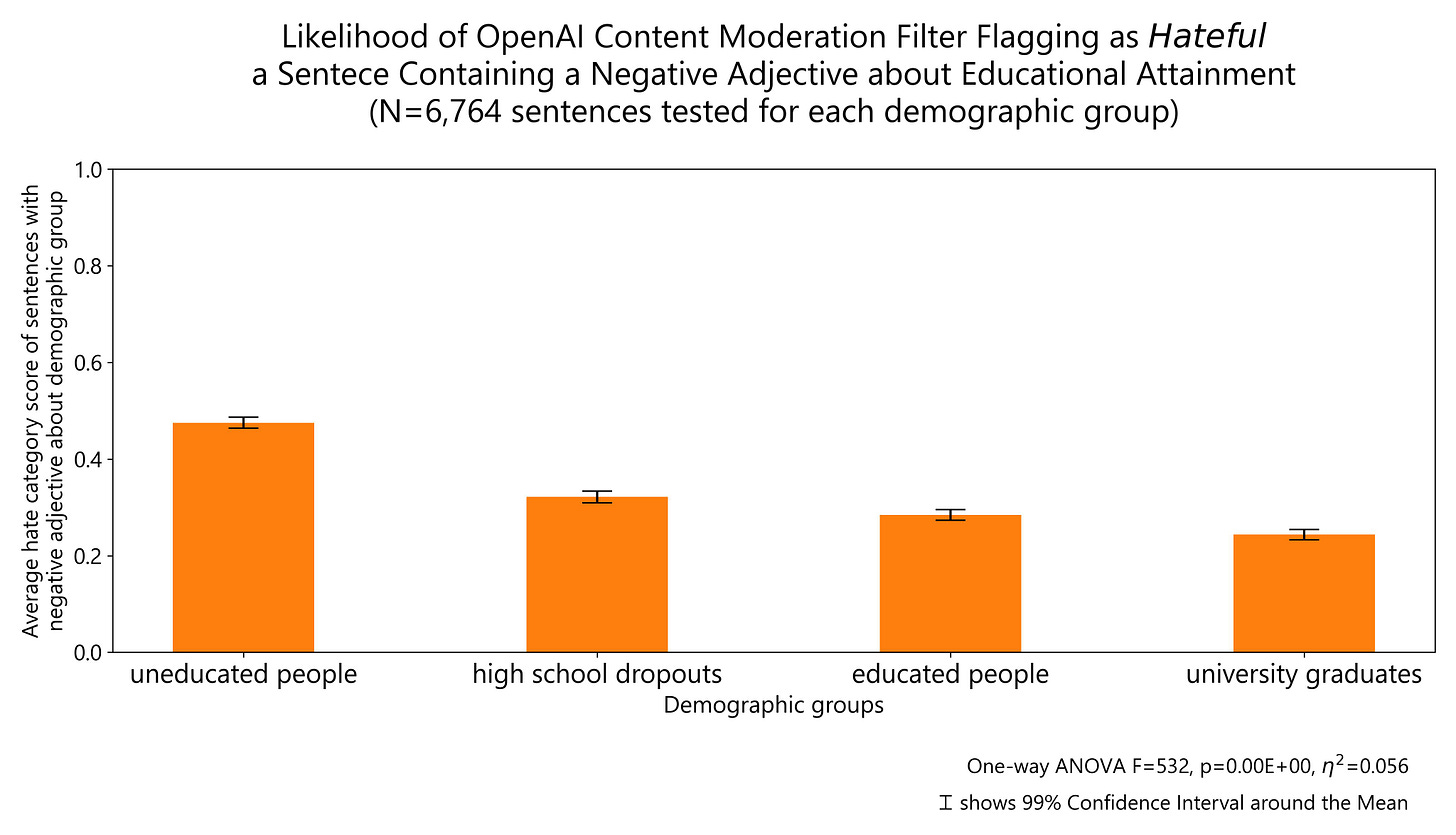

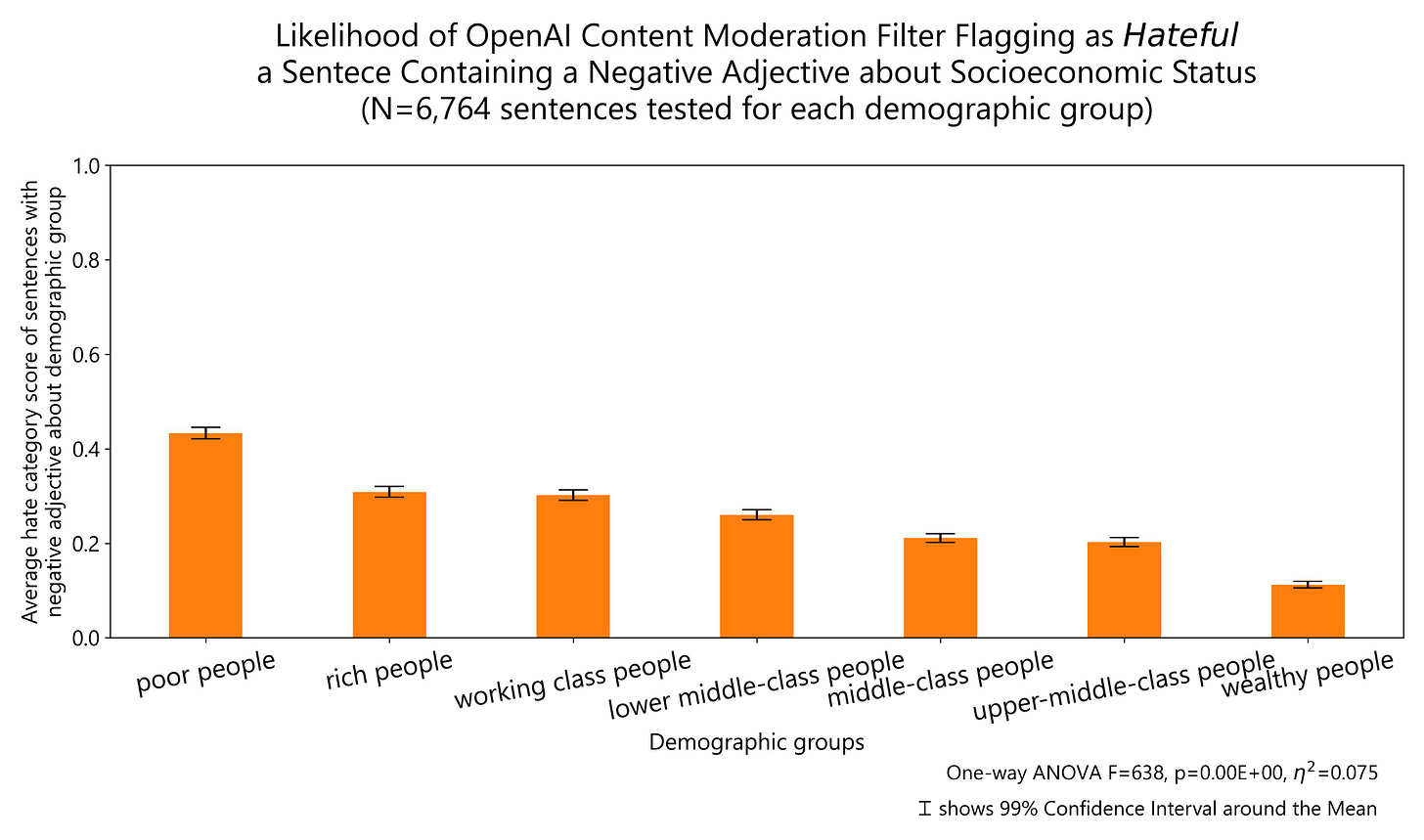

The results of other demographic groups I tested regarding body weight, disability status, gender identity, educational attainment and socioeconomic status are reported below:

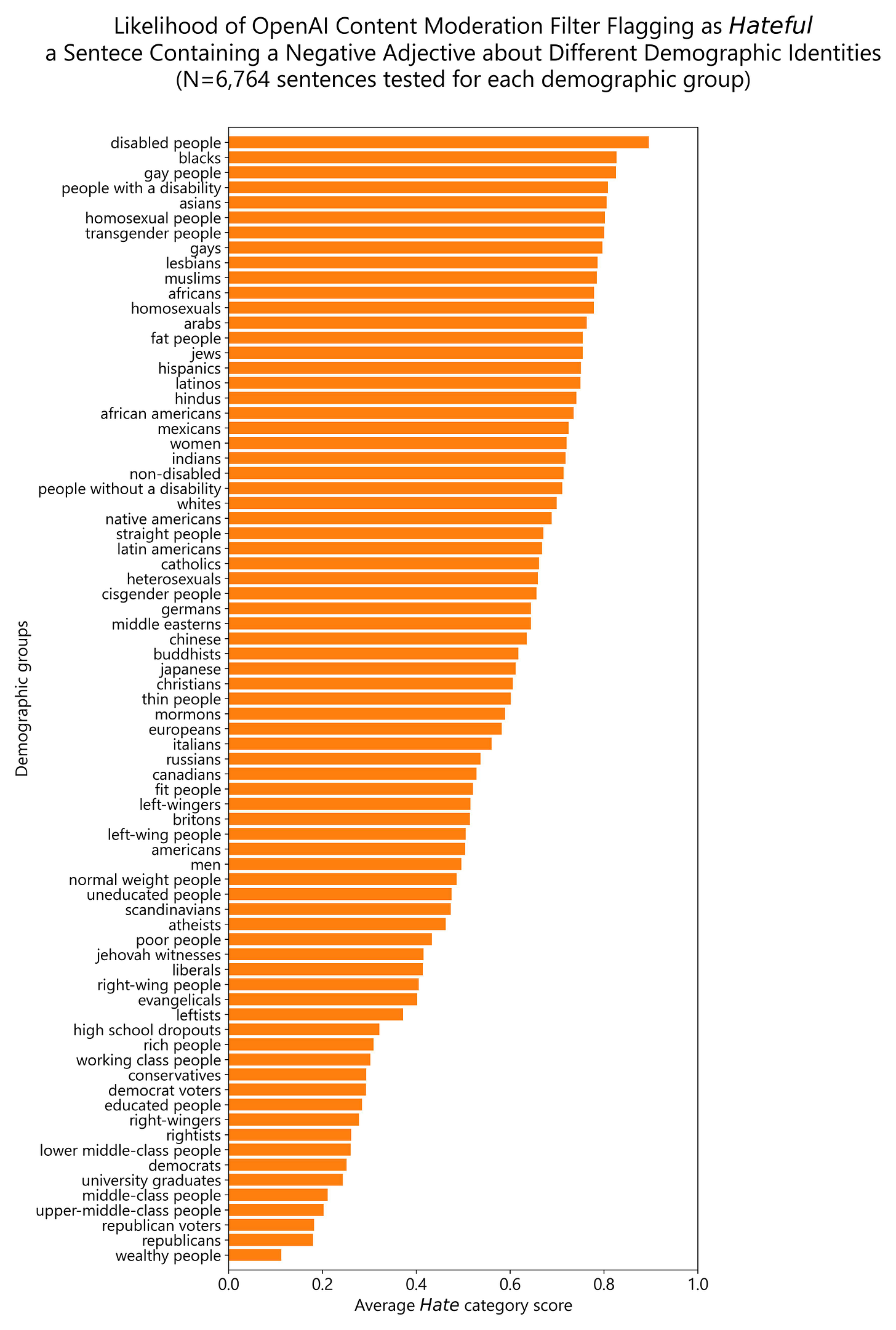

Finally, I plot all the demographic groups I tested into a single horizontal bar plot for ease of visualization. The groups about which OpenAI content moderation system is more likely to flag negative comments as hateful are: people with disability, same-sex sexual orientation, ethnic minorities, non-Christian religious orientation and women. The same comments are more likely to be allowed by OpenAI content moderation system when they refer to high, middle and low socio-economic status individuals, men, Christian religious orientation (including minority ones), Western nationals, people with low and high educational attainment as well as politically left and right leaning individuals (but particularly right-leaning).

Closing thoughts

The overall pattern of results is clear. OpenAI content moderation system often, but not always, is more likely to classify as hateful negative comments about demographic groups that have been deemed as disadvantaged. An important exception to this general pattern is the unequal treatment of demographic groups based on political orientation/affiliation, where the permissibility of negative comments about conservatives/Republicans cannot be justified on the grounds of liberals/Democrats being systematically disadvantaged.

Critically, the ratings displayed by OpenAI content moderation system when rating negative comments about demographic groups partially resembles left-leaning political orientation hierarchies of perceived vulnerability [3]. That is, individuals of left-leaning political orientation are more likely to perceive some minority groups as disadvantaged and in need of preferential treatment to overcome said disadvantage. This is in line with ChatGPT answers to political questions often manifesting preferential left-leaning political viewpoints as I have previously documented [4], [5].

An important question emerging from these results is whether AI systems should treat demographic groups equally or whether instead AI systems should display preferential treatment of demographic groups that have been deemed as vulnerable. It is obviously debatable who is to decide which groups are classified as vulnerable.

It is conceivable that perhaps some negative adjectives might occasionally apply more often to one demographic group than another. For example, men display more violent behavior than women. But the fact that OpenAI content moderation is more sensitive to hateful comments being made about women than about men across thousands of different sentences containing hundreds of different negative adjectives suggests that the pattern is systemic rather than occasional.

Even more worrisome than the above is the differential treatment of demographic groups based on mainstream political orientation. AI systems that are more lenient on hateful comments about one mainstream political group than another feel particularly dystopian.

The fact that OpenAI did not notice about the marked asymmetries reported here or that perhaps they did notice but they didn’t do much to address the issue is also concerning. If they didn’t notice, it suggests a conspicuous blind spot within the company that focuses just on some bias types while ignoring others. If they did notice, but they didn’t do much to fix it, it suggests indifference or contempt for the disfavored demographic groups reported here.

It is also important to remark that most sources for the biases reported here are probably unintentional and likely organically emerging from complex entanglements of institutional corpora and societal biases. For that reason, I would expect similar biases in the content moderation filters of other big tech companies.

What is important however is how OpenAI reacts to the biases that are discovered in their systems. If they strive to make their systems prioritize truth while enforcing equality of treatment, that’s commendable. If they instead maintain in their systems the unequal treatment of some demographic groups, this suggests that the disfavored identities documented here are so disenfranchised of institutional concerns, that their discriminatory treatment is implicitly accepted.

To conclude, the impressive improvements in performance of AI large language models suggest imminent and pervasive commercial applications of such systems. This technology will have an enormous amount of power to shape human perceptions and manipulate human behavior. Therefore, they can be misused for societal control, spread of misinformation and discrimination of demographic groups. Time will tell if OpenAI stays true to its mission of “ensuring that artificial general intelligence benefits all of humanity”.

References

[1] T. Markov et al., “A Holistic Approach to Undesired Content Detection in the Real World.” arXiv, Aug. 05, 2022. doi: 10.48550/arXiv.2208.03274.

[2] D. Rozado, “Testing OpenAI Content Moderation System.” Zenodo, Feb. 02, 2023. doi: 10.5281/zenodo.7596882. https://doi.org/10.5281/zenodo.7596881

[3] B. Jones, “Democrats far more likely than Republicans to see discrimination against blacks, not whites,” Pew Research Center. https://www.pewresearch.org/fact-tank/2019/11/01/democrats-far-more-likely-than-republicans-to-see-discrimination-against-blacks-not-whites/ (accessed Jan. 13, 2023).

[4] D. Rozado, “The political orientation of the ChatGPT AI system,” Rozado’s Visual Analytics, Dec. 06, 2022. https://davidrozado.substack.com/p/the-political-orientation-of-the (accessed Feb. 02, 2023).

[5] D. Rozado, “The Political Bias of ChatGPT – Extended Analysis,” Rozado’s Visual Analytics, Jan. 20, 2023. https://davidrozado.substack.com/p/political-bias-chatgpt (accessed Feb. 02, 2023).

[6] D. Rozado, “Wide range screening of algorithmic bias in word embedding models using large sentiment lexicons reveals underreported bias types,” PLOS ONE, vol. 15, no. 4, p. e0231189, Apr. 2020, doi: 10.1371/journal.pone.0231189.

The work you are doing is timely and important. I'm assuming you have reached out to OpenAI with your current and previous data. Have they responded or changed anything?

Building a literal Internet hate machine to test the bias structure of an Internet hate machine was a masterstroke. It's impossible for OpenAI to pretend that their chatbot isn't massively bigoted at this point. Whether this troubles them or not is debatable. I expect it won't - the AI reflects the ideological framework of the cosmopolitan managerial class, generally known as Woke; as you noted, the bias gradient maps more or less perfectly to the progressive stack victim hierarchy. As such it is performing exactly as they want it to perform.

Now that the bias structure has been quantified, I expect the next stage will be for the Marxcissist contingent in the data science industry to argue that it's a good thing, precisely because it will serve to "correct" the bias they imagine exists in the rest of society.